Introduction

Voice agents represent the next frontier in human-computer interaction, offering hands-free, conversational interfaces that can transform customer service, healthcare, education, and numerous other industries. As artificial intelligence advances, these voice-powered systems become increasingly sophisticated, capable of understanding complex queries, reasoning through problems, and responding with natural-sounding speech.

The landscape of voice agent technologies has evolved rapidly, with multiple approaches emerging to address different use cases and technical requirements. Organizations now face choices between building custom solutions with components like Speech-to-Text (STT), reasoning engines, and Text-to-Speech (TTS) systems, adopting real-time models from providers like OpenAI and Google, or leveraging specialized platforms such as Bland.ai, VAPI, and ElevenLabs. Each approach offers distinct advantages and limitations that must be carefully considered when designing voice-enabled systems.

This article examines the various architectural approaches to building voice agents, from modular frameworks to integrated solutions and third-party platforms. We will cover:

- Key Components of Voice Agents

- Technologies Involved

- Third-Party Providers

- Challenges and Limitations

Key Components of Voice Agents

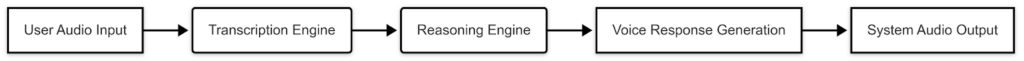

Modular Framework: STT → Reasoning → TTS

The modular approach to voice agents breaks down the interaction process into three distinct components:

- Speech-to-Text (STT): This component captures audio input and converts it to text. Providers like Google, Microsoft, and various open-source tools offer STT capabilities with different accuracy levels, language support, and latency profiles.

- Reasoning: Once speech is converted to text, AI models process this text to understand intent, extract information, and generate appropriate responses. This critical component can leverage large language models (LLMs) from providers like OpenAI (GPT models), Anthropic (Claude), Google (Gemini), or DeepSeek, as well as custom logic for specialized use cases.

- Text-to-Speech (TTS): The final component converts the text response into natural-sounding speech. TTS systems from Google, Amazon Polly, OpenAI, and others offer various voices, languages, and expressive capabilities.

This modular architecture allows for significant customization and optimization of individual components based on specific requirements. For example, a financial services application might use a specialized STT model trained on financial terminology, a reasoning layer incorporating compliance rules, and a TTS voice designed to convey trust and professionalism.
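
To make the component boundaries concrete, here is a minimal sketch of the three-stage pipeline. It assumes each stage can be wrapped as a plain function; `VoicePipeline`, `fake_stt`, `fake_reasoner`, and `fake_tts` are hypothetical stand-ins for illustration, not any provider's API.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage is a plain callable, so any vendor SDK can be wrapped behind it.
SpeechToText = Callable[[bytes], str]
Reasoner = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class VoicePipeline:
    stt: SpeechToText
    reason: Reasoner
    tts: TextToSpeech

    def handle_turn(self, audio_in: bytes) -> bytes:
        """Run one conversational turn: audio in, audio out."""
        transcript = self.stt(audio_in)
        reply_text = self.reason(transcript)
        return self.tts(reply_text)


# Hypothetical stand-ins: real components would call an STT, LLM, or TTS service.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already text


def fake_reasoner(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend encoded text is synthesized audio


pipeline = VoicePipeline(stt=fake_stt, reason=fake_reasoner, tts=fake_tts)
reply_audio = pipeline.handle_turn(b"what is my balance")
```

Because the pipeline depends only on the three callables, swapping in a domain-specific STT model or a different TTS voice means changing one argument rather than the whole system.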

Real-time Models

Real-time models, such as OpenAI’s real-time audio model, take a fundamentally different approach: they process audio input directly instead of passing it through a distinct STT → Reasoning → TTS pipeline. These models can understand speech, process information, and generate spoken responses in a more integrated way, potentially reducing latency and creating more natural-sounding interactions.

When integrated with telephony providers like Twilio, these real-time models enable sophisticated voice agents to handle calls. The key advantage is their ability to dynamically adjust language, speaking rate, accent, and tone based on the conversation context, creating a more human-like interaction.

Third-party Platforms

Several specialized platforms have emerged to simplify the development and deployment of voice agents:

- Bland.ai: Focuses on managing phone-calling agents, with an intuitive platform for setting up inbound and outbound calls. It handles infrastructure concerns such as scalability, recording, transcription, and call controls.

- ElevenLabs: Known for high-quality voice synthesis with emotional range and multilingual capabilities.

- VAPI: Offers a platform for creating voice agents without coding, with pre-built templates and integrations.

Technologies Involved

Twilio Streaming

When the modular approach is implemented with Twilio, streaming becomes crucial for reducing latency. Streaming allows the STT component to start processing audio before the utterance is complete. The process works as follows:

- Twilio captures audio from the call and streams it in real time to the STT service

- As chunks of transcribed text become available, they are sent to the reasoning layer

- The reasoning layer can begin formulating responses while still receiving input

- TTS converts the responses to audio, which Twilio streams back to the caller

This streaming architecture reduces perceived latency by enabling faster turn-taking in conversations. However, it requires careful handling of partial results and potential corrections as the full context becomes available.
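
The handling of partial results can be sketched as follows. This assumes the STT service emits interim hypotheses that may later be revised, followed by a final result for each segment; the `TranscriptBuffer` class and the `is_final` flag are hypothetical names chosen for illustration, and real services name these fields differently.

```python
from dataclasses import dataclass, field


@dataclass
class TranscriptBuffer:
    """Tracks finalized text plus the latest (still revisable) interim hypothesis."""
    final_segments: list = field(default_factory=list)
    interim: str = ""

    def update(self, text: str, is_final: bool) -> None:
        if is_final:
            # A final result replaces whatever interim guess preceded it.
            self.final_segments.append(text)
            self.interim = ""
        else:
            # Interim results overwrite each other; they are never appended.
            self.interim = text

    def current_text(self) -> str:
        parts = self.final_segments + ([self.interim] if self.interim else [])
        return " ".join(parts)


buffer = TranscriptBuffer()
buffer.update("I want to book", is_final=False)
buffer.update("I want to book a fight", is_final=False)   # misrecognition
buffer.update("I want to book a flight", is_final=True)   # corrected final
buffer.update("for Tuesday", is_final=False)
text_so_far = buffer.current_text()
```

The reasoning layer can read `current_text()` at any point to start drafting a response, but it should treat anything derived from the interim portion as provisional until the final result arrives.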

Real-time Models Integration

OpenAI’s and Google’s real-time audio models can be integrated with Twilio to create seamless voice experiences. Unlike the modular approach, these models:

- Process audio directly without separating STT/reasoning/TTS

- Support dynamic voice adjustments during the conversation

- Handle multi-turn dialogues with maintained context

- Process audio and generate responses with lower latency

However, this integrated approach is more resource-intensive and costs more (approximately $0.45/minute for OpenAI + Twilio).

Tool Usage in Voice Agents

Both architectural approaches can be enhanced through tool integration, allowing voice agents to:

- Access External Knowledge: Retrieve information from databases, knowledge bases, or the internet

- Perform Actions: Book appointments, place orders, update records, or trigger workflows

- Process Specialized Data: Analyze images, documents, or structured data

- Handle Complex Logic: Execute calculations, run simulations, or apply business rules

In the modular architecture, tools typically interface with the reasoning layer, extending the capabilities beyond what the AI model can do independently. For real-time models, tools can be integrated through function calling or API interfaces that work alongside the audio processing.
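
As a rough illustration of how tools attach to the reasoning layer, the sketch below registers Python functions by name and dispatches a tool call expressed as JSON. The JSON shape (`name` plus `arguments`), the `tool` decorator, and `book_appointment` are assumptions made for this example; each model provider defines its own function-calling format.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the reasoning layer can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def book_appointment(date: str, time: str) -> dict:
    # Hypothetical action: a real version would call a scheduling system.
    return {"status": "booked", "date": date, "time": time}


def dispatch(tool_call_json: str) -> dict:
    """Execute a tool call emitted by the model and wrap the outcome."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        # Return the error to the model rather than raising mid-call.
        return {"error": f"unknown tool: {call['name']}"}
    return {"result": fn(**call["arguments"])}


outcome = dispatch(
    '{"name": "book_appointment", '
    '"arguments": {"date": "2025-01-07", "time": "10:00"}}'
)
```

Returning errors as data lets the model explain the failure to the caller instead of dropping the conversation.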

Third-Party Providers Deep Dive

Bland.ai

Bland.ai offers a comprehensive platform for deploying voice agents with minimal technical overhead. Key capabilities include:

- Scalability: Handles high call volumes, with the enterprise tier supporting up to 20,000 calls/hour and 100,000 calls/day

- Pricing: $0.09/minute, prorated to the exact second with billing only for actual call time

- Context Management: Supports dynamic variables for personalized conversations

- Call Controls: Provides recording, transcription, and comprehensive call management features

- Integration: Offers APIs for seamless integration with existing systems

- Development Experience: Simple setup for both inbound and outbound calls

Bland.ai is particularly well-suited for organizations needing a turnkey voice agent solution without managing complex infrastructure.

ElevenLabs

ElevenLabs stands out in the TTS space with industry-leading voice quality and expressivity. When integrated into voice agent architectures, ElevenLabs offers:

- Voice Cloning: The ability to create custom voices that match specific requirements

- Emotional Range: Support for various emotional tones in speech output

- Multilingual Capabilities: High-quality speech synthesis across numerous languages

- Voice Design: Tools for crafting the perfect voice for specific brand identities

ElevenLabs can be integrated into the modular architecture as the TTS component or used alongside other voice agent platforms.

VAPI

VAPI provides a platform focused on simplifying voice agent creation through:

- No-code Interface: Visual builder for creating voice workflows

- Pre-built Templates: Starting points for common use cases

- Twilio Integration: Built-in connectivity for phone calls

- Model Selection: Support for various AI models

- Knowledge Base Integration: Easy connection to company documents and information

VAPI is designed for users who need to create voice agents quickly without extensive technical expertise, though it comes with monthly fees in addition to Twilio and model costs.

Challenges and Limitations

Latency Issues

Voice interactions are highly sensitive to latency, with even slight delays disrupting the natural flow of conversation:

- Modular Architecture: Each processing step adds latency, with STT and TTS often being the most time-consuming components. Streaming implementations can mitigate this, but add complexity.

- Real-time Models: While generally faster, they still face latency challenges, especially when handling complex queries or integrating with external tools.

- Network Considerations: Call quality and network conditions significantly impact user experience regardless of the architecture chosen.
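
One way to see where the time goes in a modular pipeline is to time each stage separately. The sketch below is a minimal approach using only the standard library; the `timed` helper is hypothetical, and the `time.sleep` calls are placeholders for real STT, reasoning, and TTS work.

```python
import time
from contextlib import contextmanager

timings = {}


@contextmanager
def timed(stage: str):
    """Record the wall-clock duration of one pipeline stage, in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start


# Placeholder workloads: replace each sleep with the real stage call.
with timed("stt"):
    time.sleep(0.01)
with timed("reasoning"):
    time.sleep(0.01)
with timed("tts"):
    time.sleep(0.01)

total_latency = sum(timings.values())
slowest_stage = max(timings, key=timings.get)
```

Logging these per-stage numbers for real calls shows which component to optimize or stream first.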

Cost Factors

Voice agent implementations involve several cost considerations:

- Modular Approach: Requires paying for individual services (STT, LLM, TTS) plus Twilio charges, with costs varying based on usage volume.

- Real-time Models: Higher per-minute costs (e.g., $0.45/minute for OpenAI + Twilio) but potentially more straightforward implementation.

- Third-party Platforms: Often involve monthly fees plus usage costs, which can be less predictable for scaling operations.
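
The per-minute figures quoted in this article can be turned into a quick per-call comparison. The sketch below uses the $0.09/minute Bland.ai rate and the approximate $0.45/minute OpenAI + Twilio rate from the text. Per-second proration is stated above only for Bland.ai; applying it to the real-time rate is an assumption made here to keep the comparison simple, and monthly platform fees are not included.

```python
from decimal import Decimal

BLAND_PER_MIN = Decimal("0.09")     # from the Bland.ai pricing above
REALTIME_PER_MIN = Decimal("0.45")  # approximate OpenAI + Twilio figure above


def call_cost(rate_per_minute: Decimal, duration_seconds: int) -> Decimal:
    """Cost of one call, prorated to the second."""
    return rate_per_minute * duration_seconds / 60


duration = 150  # a 2.5-minute call
bland_cost = call_cost(BLAND_PER_MIN, duration)        # 0.225
realtime_cost = call_cost(REALTIME_PER_MIN, duration)  # 1.125

# Scaling the same call to 1,000 calls per month (usage costs only).
monthly_bland = bland_cost * 1000
monthly_realtime = realtime_cost * 1000
```

At these rates the real-time option costs five times as much per call, so the choice comes down to whether the conversational quality justifies the difference at the expected volume.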

Scalability Challenges

Different architectures present varying scalability considerations:

- Infrastructure Management: Real-time models with Twilio require careful infrastructure planning for high-volume scenarios.

- Rate Limits: API-based components often have rate limits (e.g., Bland.ai’s default limit of 100 calls/day, compared with the 100,000 calls/day supported on its enterprise tier).

- Resource Allocation: High-concurrency voice systems need appropriate resource provisioning to maintain performance.
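
A client-side guard can keep an application within a provider's hourly and daily call caps. The following is a minimal sliding-window sketch; `CallRateLimiter` is a hypothetical helper, not part of any provider SDK, and the limits are passed in rather than hard-coded because they vary by plan.

```python
from collections import deque

HOUR = 3_600
DAY = 86_400


class CallRateLimiter:
    """Allows a call only if both the hourly and daily caps have room."""

    def __init__(self, per_hour: int, per_day: int):
        self.per_hour = per_hour
        self.per_day = per_day
        self._starts = deque()  # start times (seconds) of calls in the last day

    def try_acquire(self, now: float) -> bool:
        # Forget calls that started 24 hours ago or earlier.
        while self._starts and now - self._starts[0] >= DAY:
            self._starts.popleft()
        in_last_hour = sum(1 for t in self._starts if now - t < HOUR)
        if len(self._starts) >= self.per_day or in_last_hour >= self.per_hour:
            return False
        self._starts.append(now)
        return True


# Tiny limits so the behavior is easy to follow.
limiter = CallRateLimiter(per_hour=2, per_day=3)
decisions = [limiter.try_acquire(t) for t in (0, 1, 2, 3_600, 7_200, 86_400)]
```

Passing `now` explicitly keeps the limiter easy to test; production code would pass `time.time()` and would likely queue or delay rejected calls rather than drop them.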

Accuracy and Understanding

All voice agent architectures struggle with the following:

- Accents and Dialects: Varying effectiveness across different speaker populations

- Background Noise: Degraded performance in noisy environments

- Complex Queries: Difficulty handling nuanced requests or domain-specific terminology

- Context Maintenance: Challenges in maintaining conversation context over longer interactions
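
One common mitigation for the context problem is to keep a bounded window of recent turns alongside a fixed system prompt. The sketch below shows that idea only; `ConversationMemory` is a hypothetical class, the role/content message shape is an assumption, and older turns are simply dropped here where a fuller system might summarize them.

```python
from collections import deque


class ConversationMemory:
    """Keeps the system prompt plus the most recent turns of the dialogue."""

    def __init__(self, system_prompt: str, max_turns: int = 10):
        self.system_prompt = system_prompt
        self.turns = deque(maxlen=max_turns)  # oldest turns fall off automatically

    def add(self, role: str, text: str) -> None:
        self.turns.append({"role": role, "content": text})

    def messages(self) -> list:
        """Build the message list to send to the reasoning model."""
        return [{"role": "system", "content": self.system_prompt}, *self.turns]


memory = ConversationMemory("You are a helpful phone agent.", max_turns=2)
memory.add("user", "Hi, I need to reschedule.")
memory.add("assistant", "Sure, which appointment?")
memory.add("user", "The one on Friday.")
window = memory.messages()
```

With `max_turns=2`, the first user message has already been dropped from `window`, which illustrates the trade-off: a small window keeps requests short but can lose details the caller gave early in the call.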

Conclusion

Voice agent technology represents a rapidly evolving field with multiple architectural approaches, each offering distinct advantages for different use cases. Organizations must carefully evaluate their specific requirements when choosing between:

- Modular STT → Reasoning → TTS Architecture: Offers maximum flexibility and customization, ideal for asynchronous messaging platforms and situations requiring specialized components. When integrated with Twilio streaming, this approach can deliver acceptable latency while maintaining the benefits of component selection.

- Real-time Models with Twilio: Provides more natural conversations with lower latency and dynamic voice capabilities, though at a higher cost. This approach shines in scenarios requiring fluid, human-like interactions where price is less critical than conversation quality.

- Third-party Platforms: Bland.ai offers comprehensive call management with reasonable pricing and enterprise scalability, while platforms like VAPI and ElevenLabs provide specialized capabilities for no-code development and premium voice quality, respectively.

The future of voice agents will likely see continued convergence of these approaches, with improvements in real-time processing making integrated models more affordable, while modular architectures become more streamlined. Organizations that understand both the technical and user experience implications of these architectural choices will be best positioned to implement effective voice agent solutions that meet their specific business objectives.

As voice interaction continues to become more prevalent across industries, the technical decisions made today in selecting and implementing voice agent architectures will significantly impact an organization’s ability to deliver natural, efficient, and valuable conversational experiences to its users.