This post starts part 3 of our series “The Future of Healthcare is Here: Arionkoder’s Comprehensive Guide to AI in Medicine”. Stay tuned for more insights and practical tips from Arionkoder’s experts on harnessing the power of artificial intelligence in healthcare.

Artificial Intelligence (AI) projects in healthcare have the potential to revolutionize the industry and improve people’s lives. However, they can be complex and require rigorous, high-quality development processes to ensure success.

Capturing accurate requirements is critical to defining the project’s scope, limitations, and potential solutions. However, it is only after training and evaluating the machine learning (ML) components that the accuracy of these early assumptions can be determined.

According to the Capgemini Research Institute, approximately 85% of ML projects fail to meet their goals, despite strong operational support from their leaders. This highlights the importance of careful control in medical applications, where failures can have serious consequences for patients’ health and quality of life. Regulators such as the FDA and EMA have strict directives to prevent such failures, but these audits take place at the end of the project, when investments have already been made and the product is ready for deployment.

To mitigate risks, validate hypotheses, and identify potential issues early, AI projects often go through a Proof of Concept (PoC) study. In this article, we will explore the key elements of this development phase and how we approach it at Arionkoder.

What is a Proof of Concept (PoC)?

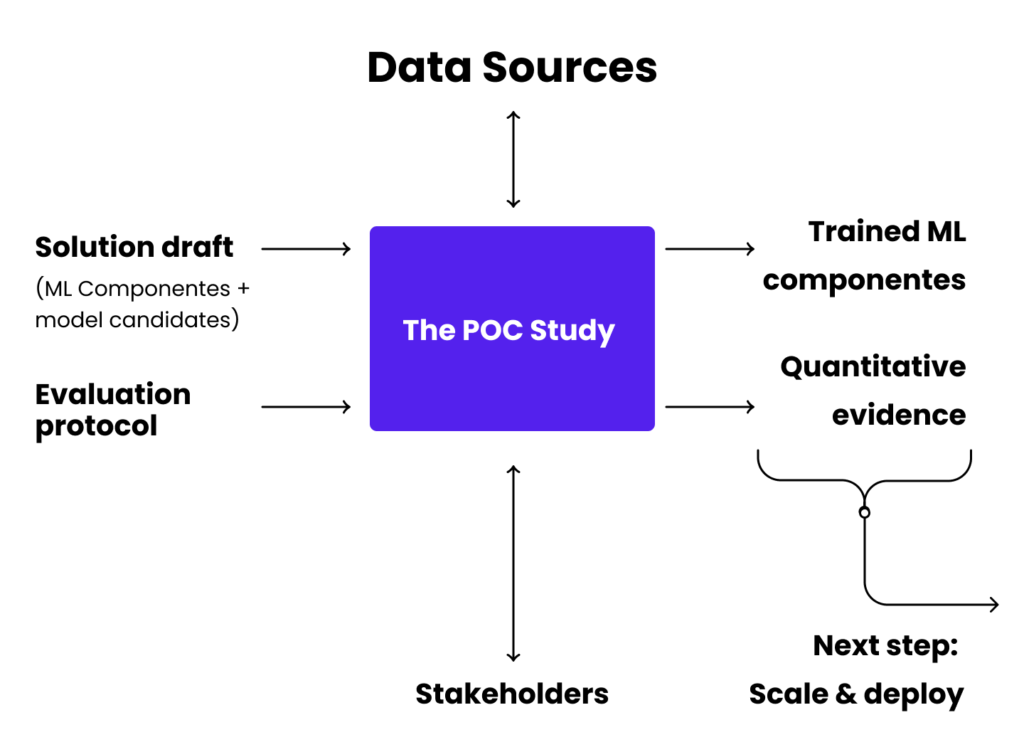

A PoC is a short-term, focused pilot study in which theories and assumptions made during solution design are tested through hands-on development. During a PoC, the major ML components of the AI solution are trained and validated using established data sources, without considering secondary elements of the solution. This allows for the confirmation of key technical decisions in just a few weeks and provides valuable quantitative information about the operational value of the AI solution before scaling to production or making further investments in its implementation.

The PoC is designed to validate critical hypotheses made during product design. It is like tasting a dish before serving it to diners: ingredients and their combinations may have been carefully selected, but it is not until the dish is prepared and tasted that one knows if the recipe is effective.

The PoC validates several assumptions, but the most critical are those related to data and models. This validation is not limited to a theoretical perspective, but instead is conducted in a practical and realistic scenario with a focus on accuracy.

The PoC evaluates the feasibility of the project given the quantity and quality of the data provided by clients. Samples are analyzed to extract valuable insights, which are then used in the context of predefined ML tasks. Alternative ML algorithms are trained and compared to identify which are best suited for implementation and which are suboptimal, too computationally expensive, or too data-hungry.

All decisions are made using the evaluation protocol established during requirements gathering, providing our partners with a quick understanding of how the algorithms will perform in real-world scenarios and any potential risks and mitigation strategies.

The PoC as a vital step in successful AI projects in healthcare

The PoC enables you to assess the feasibility of your goals and determine whether using AI is a worthwhile investment, without having to build the entire solution.

With a PoC, you can test different algorithmic options for implementing the solution’s components, and verify the value of your data in a realistic scenario. This leads to the creation of an operational instance of the solution, with the embryonic forms of the ML components ready for deployment. This is achieved in a short period and with a significantly lower investment compared to the entire project.

Additionally, a PoC provides you with quantitative evidence of the solution’s effectiveness. For startups and small companies, this information is valuable when pitching ideas to investors. Instead of presenting a non-validated project, you can show the performance of your solution through numbers. This evidence can also be used to convince future users and clients to adopt your technology once it’s ready for use.

Finally, PoCs provide a great opportunity to work with our team and get to know how we work and communicate. Our clients can use this experience to decide if they want to continue working with us on the project or explore other options. (However, we’re confident that they’ll love working with us!)

Some key considerations when planning a PoC

Before embarking on a PoC, it is critical to address three core elements:

1. A clear, well-defined scope.

The purpose of a PoC in AI projects is to focus on the ML-related components, not to create the entire solution from scratch. Establishing the main objectives of the PoC at the outset is crucial in ensuring a streamlined process and aligning expectations. In the event that too many objectives are identified for a single PoC, it may be advisable to break it down into several smaller PoCs, each focused on training and evaluating individual ML components.

2. Data accessibility.

Conducting a PoC is not feasible if access to the data required for training and evaluating models is not readily available. This data could be stored in cloud storage, embedded in various sources and databases, or on a hard drive unit, but it must be accessible for exploration and use. If data extraction is challenging, it can be addressed as a separate PoC study before proceeding to training and evaluating the ML components.

3. Identifying the stakeholders.

As evaluation is a key aspect of a PoC, it is important to report results to relevant stakeholders for analysis. To make the final determination of whether the ML components are ready for deployment, input from clients is essential. Knowing who these stakeholders are before starting the PoC is therefore a critical step.

Our PoC process for AI projects in healthcare

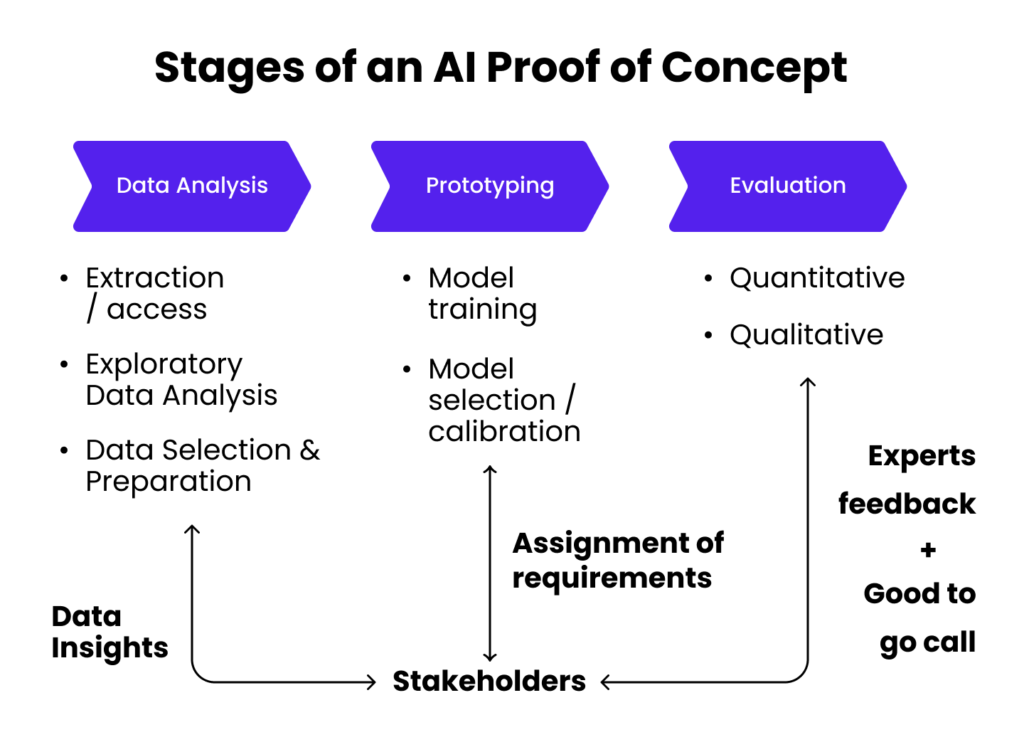

Our methodology for PoC studies in healthcare AI projects consists of three major stages: data analysis, model prototyping, and model evaluation. By strictly defining the goals and overall scope of the PoC up front, we ensure that the entire process is completed within a couple of weeks, with each stage taking a few days.

During the data analysis phase, our goal is to access and understand the clinical data used for training and evaluating our algorithms. We extract insights from these samples by studying their distribution and properties in the context of the ML tasks, and provide constant feedback to stakeholders. Additionally, data is curated and prepared for training and evaluating the ML models, with appropriate pipelines defined and documented.

Upon accessing the data, we move on to prototyping the ML components. This involves training multiple model candidates, calibrating their parameters, and assessing their performance in a cross-validation setting. We maintain constant communication with stakeholders to ensure that the obtained outputs align with the original requirements.

Finally, we evaluate the trained ML components on a held-out portion of the available data to extract quantitative metrics and derive qualitative observations. Stakeholders provide feedback at this stage, confirming the overall performance and deciding whether the models are ready for scaling and deployment, which in turn determines the success of the PoC.

Let’s delve into each of the stages:

Stage 1. Data analysis

The first stage of our process is data analysis. Our goal here is to access and understand the data required for the subsequent stages of the PoC. If the data extraction process is complex, we may split the PoC into two parts, with the first part focused on developing a reliable data extraction pipeline and the second part focused on using the extracted data for training and evaluating the ML components.

We prepare scripts for extracting clinical data from databases or medical devices as needed, depending on the scope of the PoC. Then, we perform Exploratory Data Analysis (EDA) to extract statistical information from the datasets in the context of the ML tasks. During EDA, we examine the distribution of the samples, measure the heterogeneity of the populations, and assess the overall quality of the data. This information enables us to determine the suitability of the data for the subsequent steps and provides valuable insights to the stakeholders, potentially uncovering new business opportunities for other AI-based solutions.
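As a rough illustration of the kind of statistics an EDA can produce, here is a minimal sketch that reports class balance, missing-value rates, and basic descriptive statistics. The record layout, the field names (`age`, `glucose`, `label`) and the `summarize` helper are hypothetical and chosen only for this example; a real analysis would depend on the data modality and the ML task.

```python
from collections import Counter
from statistics import mean, stdev

def summarize(records, label_key, numeric_keys):
    """Return class balance, missing-value rates, and basic statistics."""
    n = len(records)
    class_counts = Counter(r[label_key] for r in records)
    summary = {
        "n_samples": n,
        "class_fraction": {c: k / n for c, k in class_counts.items()},
        "features": {},
    }
    for key in numeric_keys:
        # Treat None as a missing measurement.
        values = [r[key] for r in records if r.get(key) is not None]
        summary["features"][key] = {
            "missing_rate": 1 - len(values) / n,
            "mean": mean(values) if values else None,
            "std": stdev(values) if len(values) > 1 else None,
        }
    return summary

# Hypothetical toy records; field names and values are illustrative only.
records = [
    {"age": 54, "glucose": 101.0, "label": 0},
    {"age": 61, "glucose": None, "label": 1},
    {"age": 47, "glucose": 95.0, "label": 0},
    {"age": 70, "glucose": 140.0, "label": 1},
]
report = summarize(records, "label", ["age", "glucose"])
print(report)
```

A report like this makes class imbalance and incomplete features visible before any model is trained.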

Finally, we use the information collected during the EDA to select valid samples, partition the data into training, validation, and test sets, and prepare the data for use by the ML models. This includes normalizing features, preprocessing images, and associating multimodal samples, as needed. The main outputs of this stage are data preparation pipelines and reports documenting the sample selection criteria and key statistics associated with the processed data.
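The partitioning and normalization steps could look roughly like the sketch below. It rests on two assumptions that are ours rather than a description of any specific pipeline: records are split by patient, so that no patient appears in more than one set, and normalization statistics are computed on the training set only. The `patient_id` and `age` fields and the 70/15/15 fractions are placeholders.

```python
import random
from statistics import mean, pstdev

def split_by_patient(records, fractions=(0.7, 0.15), seed=0):
    """Split records into train/val/test so that no patient spans two sets."""
    patients = sorted({r["patient_id"] for r in records})
    random.Random(seed).shuffle(patients)
    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    groups = {
        "train": set(patients[:n_train]),
        "val": set(patients[n_train:n_train + n_val]),
        "test": set(patients[n_train + n_val:]),  # remaining patients
    }
    return {name: [r for r in records if r["patient_id"] in ids]
            for name, ids in groups.items()}

def fit_scaler(rows, key):
    """Compute mean and standard deviation of one feature."""
    values = [r[key] for r in rows]
    return mean(values), pstdev(values) or 1.0  # avoid division by zero

def apply_scaler(rows, key, mu, sigma):
    """Return copies of the rows with the feature standardized."""
    return [{**r, key: (r[key] - mu) / sigma} for r in rows]

# Hypothetical toy data: 20 patients with two visits each.
records = [{"patient_id": p, "visit": v, "age": 40 + p}
           for p in range(20) for v in range(2)]

splits = split_by_patient(records)
mu, sigma = fit_scaler(splits["train"], "age")  # statistics from training data only
scaled = {name: apply_scaler(rows, "age", mu, sigma)
          for name, rows in splits.items()}
```

Fitting the scaler on the training set and reusing it for the validation and test sets keeps information from the held-out data out of the preprocessing.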

Stage 2. Model prototyping

In this stage, we utilize the data that was collected and preprocessed in the previous phase to train ML models. To do so, we select state-of-the-art algorithms that align with the solution’s requirements, and individually train them using appropriate configurations.

To optimize the models’ parameters, we either use standard search strategies such as grid or random search, or leverage AutoML solutions to automate the process. In cases where the number of parameters is too extensive to be covered within the PoC timeframe, a subset of them is selected for study and their influence is documented in the final report.
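To make the difference between the two standard strategies concrete, the following sketch implements both over a small search space. Everything in it is illustrative: `toy_score` stands in for a real validation score, and the parameter names `learning_rate` and `depth` are placeholders rather than the settings of any particular model.

```python
import itertools
import random

def grid_search(param_grid, score_fn):
    """Score every combination in param_grid and return the best one."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def random_search(param_grid, score_fn, n_iter, seed=0):
    """Score n_iter randomly sampled combinations and return the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in param_grid.items()}
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    """Hypothetical stand-in for a validation score; it peaks at (0.1, 3)."""
    return -(params["learning_rate"] - 0.1) ** 2 - (params["depth"] - 3) ** 2

param_grid = {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 3, 5]}
grid_best, grid_score = grid_search(param_grid, toy_score)
rand_best, rand_score = random_search(param_grid, toy_score, n_iter=4)
```

Grid search visits all nine combinations, while random search evaluates only four of them. That trade-off matters when each evaluation means training a model and the PoC timeframe is short.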

To avoid overestimating real-world performance, all models are evaluated with appropriate metrics and compared using cross-validation or a separate validation set. The best-performing models are selected based on this evaluation, and their results are discussed with stakeholders to ensure they meet the functional and non-functional requirements. Stakeholders also play a crucial role in providing feedback on the format of the outputs and the computational time of the models.
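A minimal sketch of comparing candidates with k-fold cross-validation is shown below. The two candidates, a majority-class baseline and a simple threshold rule, and the synthetic data are assumptions made purely for illustration. In a real PoC the candidates would be actual ML models and the metric would come from the agreed evaluation protocol.

```python
import random
from statistics import mean

def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and deal them into k disjoint folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def cross_validate(fit, xs, ys, k=5, seed=0):
    """Return the mean validation accuracy of `fit` across k folds."""
    scores = []
    for held_out in k_fold_indices(len(xs), k, seed):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        # Train on k-1 folds, then score on the fold that was left out.
        predict = fit([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(predict(xs[i]) == ys[i] for i in held_out)
        scores.append(correct / len(held_out))
    return mean(scores)

def fit_majority(xs, ys):
    """Baseline candidate: always predict the most frequent training label."""
    majority = max(set(ys), key=ys.count)
    return lambda x: majority

def fit_threshold(xs, ys):
    """Toy candidate: threshold halfway between the two class means."""
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    threshold = (mean(pos) + mean(neg)) / 2
    return lambda x: int(x > threshold)

# Synthetic data: one measurement per sample, positive when it is 50 or more.
xs = list(range(100))
ys = [int(x >= 50) for x in xs]

candidates = {"majority": fit_majority, "threshold": fit_threshold}
results = {name: cross_validate(fit, xs, ys) for name, fit in candidates.items()}
best = max(results, key=results.get)
print(results, best)
```

Because every sample is used for validation exactly once, the averaged score is less sensitive to a lucky or unlucky split than a single train/validation partition.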

The deliverables of this stage include the trained ML models and a report documenting the discarded solutions, their performance, and opportunities for improvement.

Stage 3. Evaluation

In this stage, we assess the performance of the trained ML models by applying the pre-defined evaluation protocols. The aim is to obtain both quantitative and qualitative results that demonstrate the overall performance achieved during the PoC.

This stage is critical as it allows us to determine the viability of the model in a real-life production environment. If there were any limitations or shortcomings in the initial solution design, the evaluation stage provides early evidence of these, thereby avoiding costly investments in scaling and deployment.

By presenting and discussing the results with stakeholders, we can identify potential areas for improvement in terms of model development and data collection, or even reevaluate the final use case and overall solution design.

The main outputs of the evaluation stage include reports documenting the performance on the held-out data set, identifying any risks in the solution, and proposing hypotheses for mitigating their effects.
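For a binary classification task, the quantitative part of such a report might include metrics like the ones computed in the sketch below. The choice of sensitivity, specificity, and predictive values is our assumption for this example, since the actual metrics are fixed by the evaluation protocol of each project. The labels and predictions are made up.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(t == 1 and p == 1 for t, p in pairs),
        "fp": sum(t == 0 and p == 1 for t, p in pairs),
        "tn": sum(t == 0 and p == 0 for t, p in pairs),
        "fn": sum(t == 1 and p == 0 for t, p in pairs),
    }

def clinical_metrics(y_true, y_pred):
    """Compute common diagnostic metrics from held-out labels and predictions."""
    c = confusion_counts(y_true, y_pred)

    def ratio(numerator, denominator):
        # Return None when a metric is undefined (empty denominator).
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(c["tp"], c["tp"] + c["fn"]),
        "specificity": ratio(c["tn"], c["tn"] + c["fp"]),
        "ppv": ratio(c["tp"], c["tp"] + c["fp"]),
        "npv": ratio(c["tn"], c["tn"] + c["fn"]),
    }

# Made-up held-out labels and model predictions, for illustration only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
metrics = clinical_metrics(y_true, y_pred)
print(metrics)
```

Reporting several metrics side by side helps stakeholders see trade-offs, such as a model that rarely misses positive cases but raises many false alarms.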

Finally, it’s important to keep in mind that the PoC process is often iterative and incremental. Depending on the predefined scope, we may start by accessing a small, representative sample of data to train a single model, run it on a test set, and iteratively refine the process. This collaborative effort allows both the client and our team to better understand the problem and its specific requirements. However, deadlines in a PoC are usually strict and the results are reported as they stand once the timeframe has expired. If necessary, a subsequent PoC can be planned with a new set of objectives and scope.

What comes next after the PoC

Upon completion of the PoC, we will have obtained essential data extraction and preparation routines, a collection of well-documented trained ML models, and a quantitative and qualitative evaluation of their performance on held-out datasets. The subsequent steps to take will depend on the accuracy and reliability of these prototypes.

If the results meet the quality threshold and goals defined at the outset of the PoC, the client has the option to package the models into software and scale them for production. This involves creating APIs and microservices infrastructure for predictions, developing a user interface for model interaction, and deploying them in the cloud or on dedicated servers. Typically, this involves expanding the development team from a small group of Data Scientists and Machine Learning engineers to a larger, more software-focused team including front-end and back-end developers, QA analysts, and Project Managers. Retaining the Data Scientists who were involved in the PoC is a plus, as they already have expertise in the product and can provide valuable insights to the development team.

If the results are good but not sufficient for a “go-to-market” decision, a retrospective PoC may be performed to determine the sources of failure and implement corrective measures. This would involve taking the outputs and deliverables of the initial PoC and trying to improve them by collecting additional data, refining the data extraction and preparation processes, or modifying the ML prototypes. This requires following the same PoC procedure with a more focused scope to attain the desired results.

Finally, if the results are significantly off target, it may indicate that the initial idea was infeasible. Although the reasons for failure can vary from project to project and are challenging to generalize across all healthcare initiatives, they are often related to domain-specific limitations, such as an under-explored medical scenario or a complex problem, or difficulties with data, such as having too few samples or samples with quality issues. In some cases, correcting these issues may be too challenging or costly, requiring the idea to be discarded after the PoC. However, this scenario is rare, as ill-posed projects are often identified early on through our in-depth requirements engineering stage. Nevertheless, failure is always a possibility when conducting a PoC and it serves as a valuable method to avoid pursuing an infeasible project.
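The three outcomes described above can be summarized as a simple decision rule. The sketch below is only a way to make the logic explicit: the target values and the `margin` that separates "good but not sufficient" from "significantly off target" are hypothetical, and in practice this decision is taken together with stakeholders rather than by a script.

```python
def next_step(metrics, targets, margin=0.10):
    """Map held-out metrics to one of the three post-PoC outcomes.

    `margin` is a hypothetical tolerance: results that miss a target by no
    more than this amount are treated as good but not sufficient.
    """
    # Positive shortfall means the metric is below its target.
    worst_shortfall = max(targets[name] - metrics[name] for name in targets)
    if worst_shortfall <= 0:
        return "scale to production"
    if worst_shortfall <= margin:
        return "retrospective PoC"
    return "reconsider the idea"

# Hypothetical targets agreed at the outset of the PoC.
targets = {"sensitivity": 0.90, "specificity": 0.85}

print(next_step({"sensitivity": 0.92, "specificity": 0.88}, targets))
print(next_step({"sensitivity": 0.85, "specificity": 0.88}, targets))
print(next_step({"sensitivity": 0.60, "specificity": 0.88}, targets))
```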

To conclude…

PoC studies are a crucial step in AI projects in healthcare, as they enable us to evaluate different ML solutions in a realistic environment. Through PoCs, we gain access to well-documented ML models that have been trained to solve specific tasks and provide performance data that allows for smoother scaling. This is essential for ensuring that valuable resources, such as time and money, are not wasted at an early stage of the project.

At Arionkoder, we have the expertise and team to deliver successful AI PoCs in record time for medical applications. Our support empowers start-ups and healthcare providers to create MVPs quickly and secure the evidence needed to attract investors. If you’re interested in embarking on this journey with us, don’t hesitate to reach out. We’re here to help and make sure your AI project in healthcare is a success.

Don’t miss out on our future articles, where we will delve deeper into the topic of AI in healthcare and how it’s revolutionizing the industry. Stay tuned!