Intro

Generative AI can be a game-changer for experimentation, especially for quickly building functional prototypes. While exploring the design of AI agents for healthcare, we set out to test whether Generative AI tools could take Figma prototypes and turn them into front-end code. If this approach proves viable, it could significantly streamline the early stages of development and reduce the manual effort required from front-end developers.

However, the journey wasn’t straightforward. Generative AI tools may seem promising, but they don’t always fit neatly into a compliant, user-friendly workflow. Figma plugins, for their part, offer some automation but fall well short of the flexibility and contextual understanding that working within an IDE provides.

This article outlines the tools we tested, their potential, and the lessons we learned about using Generative AI to create functional front-end designs.

The context

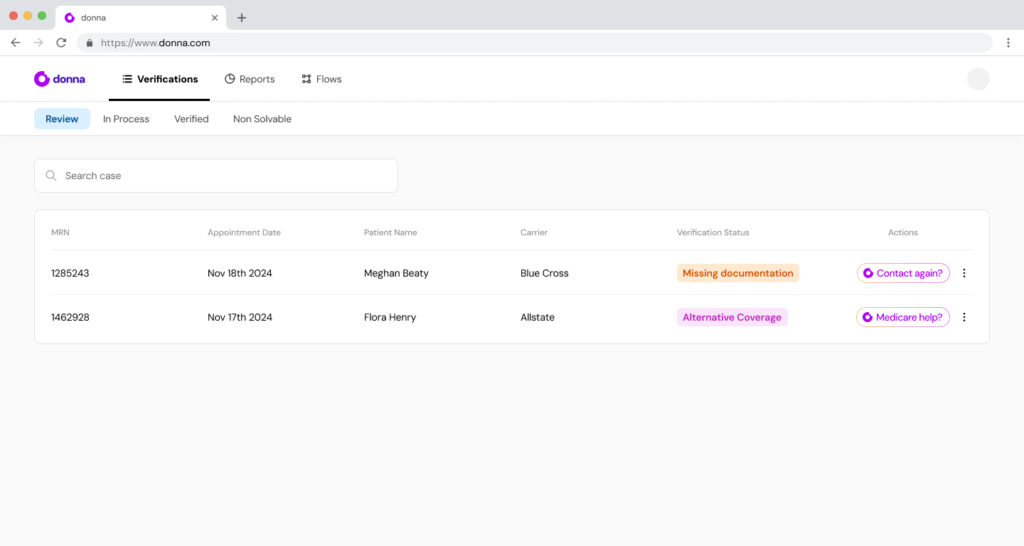

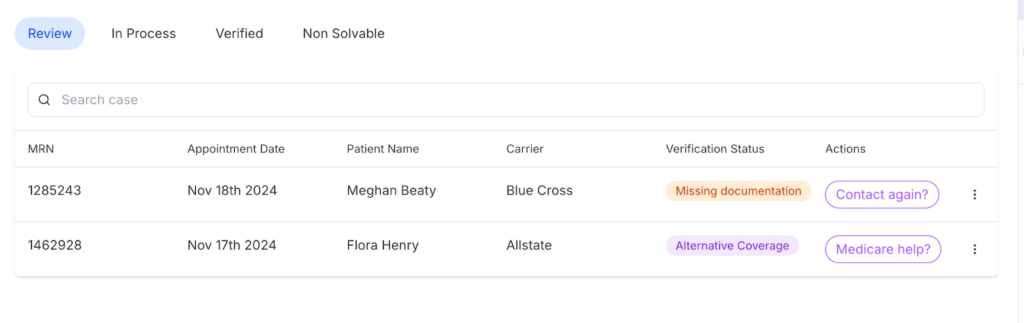

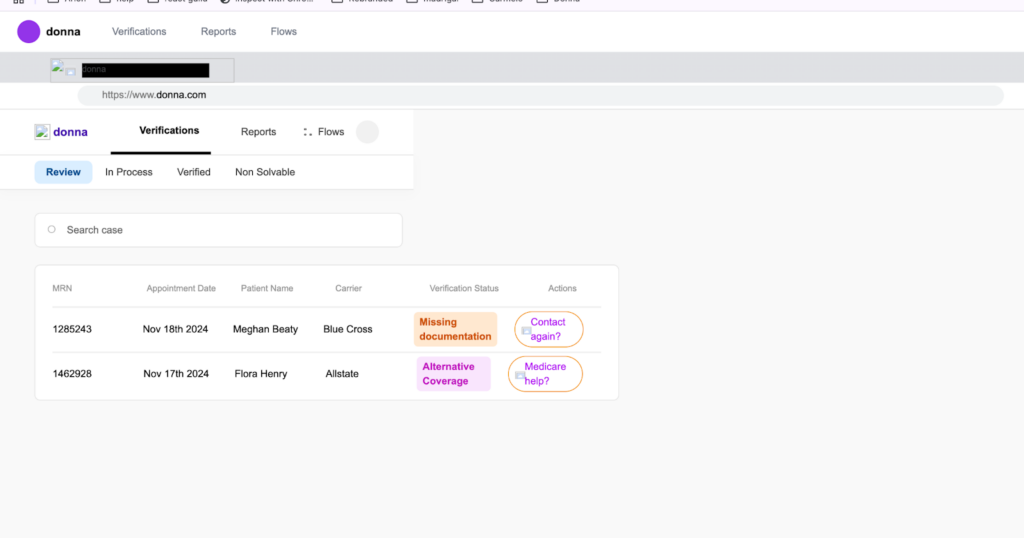

Our project began with a specific challenge: designing a user interface where clinic staff could audit and interact with the actions taken by an LLM-based Eligibility Verification agent. After testing this interface with users, we refined the design into polished, customized Figma screens. These screens were an ideal candidate for exploring whether AI tools could accelerate front-end development.

The core question was clear:

Can AI tools generate production-ready front-end code directly from Figma designs while maintaining fidelity, usability, and responsiveness?

Alongside this, we asked additional questions: Is the return on effort sufficient to justify using these tools? Which scenarios align best with their capabilities? And when should developers revert to traditional methods?

The selected tools

We explored four primary approaches to answer this question:

- V0: A web-based tool integrated with Vercel, using Next.js, TailwindCSS, and Shadcn as the default stack.

- Bolt: A sandbox environment for AI-generated full-stack web apps, defaulting to React and TailwindCSS.

- Cursor: An AI-powered code editor built on VS Code, offering suggestions, autocompletion, and direct design-to-code capabilities.

- Figma Plugins: Extensions to Figma designed to export designs as React code.

We considered Figma plugins but quickly ruled them out. Their lack of contextual understanding and flexibility made it clear that working directly within an IDE offered better results and greater control over the development process.

How do these tools stack up when applied to real-world, nuanced design challenges in healthcare?

The First Try: Early Testing Notes

We began by running a quick test to evaluate the immediate potential of each tool. The initial approach was simple: input the Figma screens into the tools and compare the generated outputs against four key criteria:

- Fidelity to Design: Did the resulting code visually match the original Figma prototype?

- Code Quality: Was the generated code clean, modular, and easy for developers to work with?

- Responsiveness: Could the tools handle adaptive layouts for various devices?

- Time Savings: Did the tools genuinely speed up the process compared to manual coding?

Here’s what we found:

V0 delivered surprisingly accurate results for static components. However, dynamic elements such as dropdowns and animations often required manual intervention. The generated code was clean, but it lacked the nuance needed for complex workflows.

Bolt gave us more in-depth visibility into the code and more room to manipulate it, but it could not configure the project without manual intervention. This led to a series of errors and, ultimately, to an interface that required significant refactoring to meet our project’s requirements.

Cursor showed promise in a different way: its integration with VS Code let us guide the AI in real time, making it a strong tool for iterative development. It did not excel at generating entire layouts directly from designs, but we could still see its potential.

Figma plugins turned out to be the least effective. They promised to turn the design into an HTML file, but the resulting code was poorly structured: they could not distinguish one kind of element from another (for example, every element was rendered as a div), and the overall result was undesirable. They also lacked the contextual understanding to handle responsive design or to integrate effectively with the larger development workflow.

This initial test helped us understand the limitations and strengths of each tool, setting the stage for deeper experimentation.

Stay tuned for part two of this series, where we’ll dive deeper into each tool, share specific results, and discuss the conclusions we drew from this experiment.