The integration of LLM-based AI agents into healthcare has the potential to revolutionize workflows and improve patient outcomes. However, these opportunities come with a critical challenge: ensuring compliance with strict regulations like HIPAA while also safeguarding patient trust. This is especially important now that ChatGPT, accessed through OpenAI's API, has become the de facto choice for building AI solutions. While it’s among the most powerful tools available, integrating it in a compliant way can be challenging.

What does it take to ensure AI agents respect sensitive data while driving innovation in healthcare? Below, we explore two key approaches to building compliant agents and reflect on the trade-offs each brings.

Approach #1: Compliance by Design

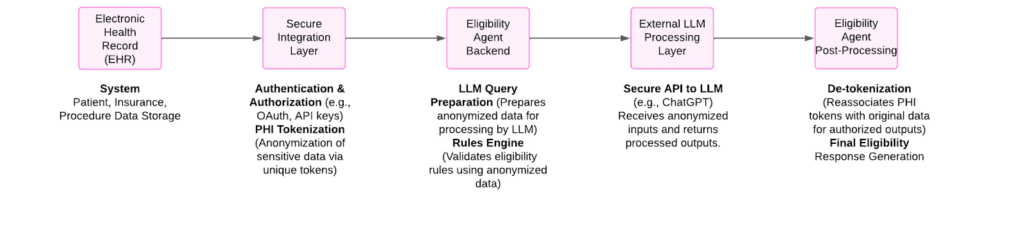

Can we design systems that empower AI while safeguarding sensitive information? One way is to build compliant architectures that integrate directly with existing healthcare systems. The Eligibility Agent we developed is a good example of this approach. Our LLM-based agent connects to an EHR system to access patient, insurance, and procedure data, but it doesn’t store everything. Instead, we tokenize Protected Health Information (PHI), replacing each sensitive value with an opaque token, so the data stays protected even when it is processed by external tools like ChatGPT.
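To make the tokenization step concrete, here is a minimal Python sketch. It assumes PHI arrives as structured EHR fields whose names are known in advance (the `PHI_FIELDS` set is hypothetical), and it uses an in-memory `TokenVault` class as a stand-in for a real vault, which would live in an encrypted, access-controlled store. Only the tokenized record would ever be placed in a prompt for an external model.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a token vault; a real one would use an encrypted, access-controlled store."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}              # token -> original value
        self._by_value: dict[tuple[str, str], str] = {}  # (kind, value) -> token

    def tokenize(self, kind: str, value: str) -> str:
        """Return an opaque token for value, reusing it if the same value was seen before."""
        key = (kind, value)
        if key not in self._by_value:
            token = f"<{kind}_{secrets.token_hex(8)}>"
            self._by_value[key] = token
            self._by_token[token] = value
        return self._by_value[key]

    def detokenize(self, token: str) -> str:
        return self._by_token[token]


# Hypothetical set of EHR fields treated as PHI in this example.
PHI_FIELDS = {"name", "dob", "member_id", "phone"}


def tokenize_record(record: dict[str, str], vault: TokenVault) -> dict[str, str]:
    """Replace PHI fields with tokens; non-PHI fields (e.g. procedure) pass through unchanged."""
    return {
        key: vault.tokenize(key.upper(), value) if key in PHI_FIELDS else value
        for key, value in record.items()
    }


if __name__ == "__main__":
    vault = TokenVault()
    record = {"name": "Jane Doe", "dob": "1980-04-12", "member_id": "XJ123", "procedure": "MRI brain"}
    safe = tokenize_record(record, vault)
    # Only this tokenized version would go into a prompt for the external model.
    print(f"Check insurance eligibility for: {safe}")
```

Because the same value always maps to the same token within a vault, the model can still refer to "the patient" or "the member ID" consistently, while the mapping back to real values stays in the vault.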

In our project, we integrated voice-enabled AI agents to handle tasks like contacting patients or insurance providers to verify information. While this made our agent more useful, it also introduced challenges in handling sensitive information securely during live interactions, where de-tokenization was necessary.

To implement voice-enabled AI agents in compliance with privacy standards like HIPAA, we followed these steps:

- Secure Voice Interface Integration: We incorporated a voice interface capable of managing live interactions securely, ensuring that all voice data is transmitted and processed in compliance with applicable regulations.

- Secure De-Tokenization Mechanisms: We established secure methods for de-tokenization when sensitive data needed to be shared in real time, ensuring that the original data could only be retrieved through authorized and secure processes. This included:

  - Session-specific encryption keys to decrypt tokens securely during the interaction.

  - Identity verification of the recipient before de-tokenizing and sharing any sensitive data.

  - De-tokenization only within a secure, isolated environment with restricted access.

  - Logging of de-tokenization events for compliance and auditing purposes.

  - Fail-safes that abort de-tokenization if any anomalies or breaches are detected during the session.

- Adherence to Privacy Standards: Our system was designed to comply with privacy standards such as HIPAA for voice-based communications, incorporating necessary safeguards to protect sensitive information.

- Continuous Monitoring and Auditing: We established ongoing monitoring and logging of voice interactions to ensure security and maintain auditability, allowing for prompt detection and response to any issues.
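The de-tokenization safeguards listed above could be combined roughly as follows. This is a hypothetical sketch, not our production code: the `VoiceSession` class, the identity fields, and the `MAX_REVEALS` threshold are assumptions for illustration. Python's standard library has no authenticated cipher, so instead of decrypting with a session key, the sketch uses an HMAC keyed per session to prove that a token handle was issued for this call; a real deployment would use a vetted cipher such as AES-GCM with keys from a key management service.

```python
import hashlib
import hmac
import logging
import secrets

# Audit trail for every identity check, reveal, and abort (hypothetical logger name).
audit_log = logging.getLogger("phi.audit")


class DetokenizationAborted(Exception):
    """Raised when a fail-safe blocks de-tokenization."""


class VoiceSession:
    """Hypothetical per-call gate for PHI, meant to run inside the isolated service."""

    MAX_REVEALS = 5  # assumed anomaly threshold: more reveals than this in one call is suspicious

    def __init__(self, session_id: str, vault: dict[str, str]):
        self.session_id = session_id
        self._key = secrets.token_bytes(32)  # session-specific key, discarded when the call ends
        self._vault = vault                  # token -> original value
        self.recipient_verified = False
        self.reveals = 0
        self.aborted = False

    def _tag(self, token: str) -> str:
        return hmac.new(self._key, token.encode(), hashlib.sha256).hexdigest()

    def bind(self, token: str) -> str:
        """Issue a handle that only this session can redeem: token plus HMAC tag."""
        return f"{token}.{self._tag(token)}"

    def verify_recipient(self, provided: dict[str, str], expected: dict[str, str]) -> bool:
        """Check identity details the caller gives (e.g. DOB, member ID) against the record."""
        self.recipient_verified = bool(expected) and all(
            provided.get(name) == value for name, value in expected.items()
        )
        audit_log.info("session=%s identity_verified=%s", self.session_id, self.recipient_verified)
        return self.recipient_verified

    def detokenize(self, handle: str) -> str:
        """Return the original value only if every safeguard passes; otherwise abort the session."""
        token, _, tag = handle.rpartition(".")
        if self.aborted:
            reason = "session already aborted"
        elif not self.recipient_verified:
            reason = "recipient not verified"
        elif not hmac.compare_digest(tag.encode(), self._tag(token).encode()):
            reason = "handle was not issued for this session"
        elif self.reveals >= self.MAX_REVEALS:
            reason = "too many reveals in one call"
        else:
            reason = None
        if reason is not None:
            self.aborted = True
            audit_log.warning("session=%s abort reason=%s", self.session_id, reason)
            raise DetokenizationAborted(reason)
        self.reveals += 1
        audit_log.info("session=%s revealed token=%s", self.session_id, token)
        return self._vault[token]
```

Any failed check marks the whole session as aborted, so a single anomaly stops all further reveals for that call rather than just the offending request.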

This approach shows that innovation and compliance aren’t mutually exclusive. By enabling integration with external tools while safeguarding sensitive data, we open the door to impactful AI solutions that work within strict security standards. It demonstrates how careful planning and practical problem-solving can push healthcare technology forward, allowing organizations to adopt new capabilities responsibly and with confidence.

Approach #2: Keeping It All in a Compliant Environment

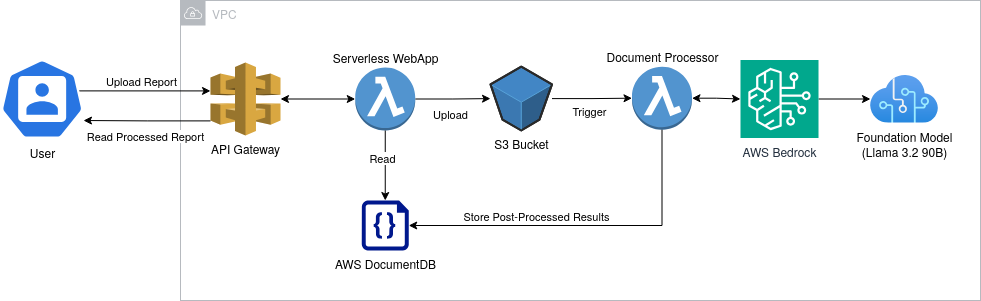

Another approach to ensuring compliance is to keep all operations within a controlled, secure environment. In one project, we worked with a customer who had an AI component designed to recognize and process lab reports. Since these reports contained Protected Health Information (PHI), sending sensitive data to external services for processing was not an option due to HIPAA compliance requirements.

To address this challenge, we used Amazon Bedrock to deploy Llama Vision, an openly available foundation model from Meta, within the customer’s existing AWS environment. Since the system was already built on AWS, the solution had to work seamlessly within that infrastructure. This let us use advanced AI capabilities while keeping full control over sensitive data, which ensured compliance with regulatory standards and protected patient privacy.
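As an illustration, a lab-report image could be sent to a Llama vision model through the Bedrock Runtime Converse API with boto3, using the customer's own AWS credentials and Region. The model ID, prompt, and Region below are assumptions; check which Llama vision models and inference profiles are enabled in the target account. The client is passed in as a parameter so the request shape can be tested without calling AWS.

```python
from typing import Any

# Assumed inference-profile ID for Llama 3.2 11B Vision; verify it for your account and Region.
MODEL_ID = "us.meta.llama3-2-11b-instruct-v1:0"

# Hypothetical extraction prompt for illustration.
PROMPT = (
    "Extract the test name, result value, unit and reference range for each row "
    "of this lab report. Answer as JSON lines."
)


def build_messages(image_bytes: bytes, image_format: str = "png") -> list[dict[str, Any]]:
    """Build a Converse API user message pairing the lab-report image with the prompt."""
    return [{
        "role": "user",
        "content": [
            {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
            {"text": PROMPT},
        ],
    }]


def read_lab_report(client: Any, image_bytes: bytes) -> str:
    """Send the image to the model through Bedrock and return the first text block of the reply."""
    response = client.converse(
        modelId=MODEL_ID,
        messages=build_messages(image_bytes),
        inferenceConfig={"maxTokens": 1024, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]


def make_client(region: str = "us-east-1") -> Any:
    # Imported lazily so the helpers above can be exercised without boto3 installed.
    import boto3
    return boto3.client("bedrock-runtime", region_name=region)
```

For example, `read_lab_report(make_client(), Path("report.png").read_bytes())` would return the model's text reply, which downstream code could parse and validate before storing it alongside the patient record.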

This solution also provided the customer with the flexibility to refine the AI model for their specific needs, such as improving accuracy in report recognition. It highlights how controlled environments can be an effective choice for organizations handling sensitive information, offering both security and adaptability while minimizing reliance on third-party systems.

The trade-off of this approach is clear: while it offers unparalleled control and compliance, it requires internal technical expertise to deploy, operate, and refine the model. For organizations with strict privacy requirements, however, this approach provides the peace of mind that data never leaves their secure environment.

Beware of Third-Party Tools

We evaluated third-party tools but found them unsuitable for real-world applications, because adapting them to our use cases while ensuring compliance proved too complex. Although such tools may promise seamless compliance, they carry inherent risks: entrusting sensitive healthcare data to intermediaries raises concerns about how that data is handled and kept private. For this reason, we opted to develop in-house solutions, giving us full control over compliance and reducing potential vulnerabilities.

The Future of Compliant AI Agents

As healthcare continues to transform through AI, understanding the alternatives for implementing compliant agents is crucial. Whether through compliant architectures, controlled environments, or other custom solutions, choosing the right approach depends on the specific use case and organizational needs.

Also, we like to remind ourselves that compliance should not be seen as a barrier to innovation but as a foundation for building trust and driving adoption in healthcare. As product shapers, we must consider: How can we ensure compliance becomes an enabler of innovation rather than a constraint?

At Arionkoder, we’re committed to helping healthcare organizations navigate this complex landscape. Our Reshape Health studio specializes in crafting compliant, innovative AI solutions tailored to your needs. Let’s build the future of healthcare together! Reach out to us at [email protected]