Most discussions about UX and AI focus on making AI outputs explainable and helping users understand AI-driven decisions. This is crucial, but there is another, often overlooked opportunity: using AI to improve the experience itself through Adaptive UIs.

If you’ve been following our work, you may remember that a few weeks ago we published an exploration of how AI can rethink settings and notifications to create a more fluid, adaptive experience (Reimagining Settings Interfaces). That was just the start: the same principles can reshape how we design and interact with digital products. Does that sound too big to be real? Just wait and see.

What are Adaptive UIs?

Adaptive UIs (AUIs) are interfaces that dynamically adjust to particular needs and behaviors. Instead of forcing users into rigid structures, they reshape themselves to provide more relevant, streamlined, and personalized interactions. The result? A single, more malleable UI that flexes to accommodate different use cases, boosting engagement and usability.

Think of AUIs as a mix of feature flags, personalized recommendations, and prompting. Instead of one-size-fits-all interfaces, experiences can evolve in three key ways:

- General variations: Broad UI adjustments that cater to specific user and market segments.

- Organization variations: Fine-tuned personalizations that respond to an organization’s goals, workflows, and context.

- Individual variations: Fine-tuned personalizations that respond to a user’s specific habits and needs.
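One way to picture these three levels is as layers of overrides applied on top of a shared default, much like stacked feature flags. The sketch below assumes a hypothetical `UiConfig` shape and a `resolveUiConfig` helper; it is an illustration of the layering idea, not a prescribed architecture. Later layers win, so an individual preference overrides an organization setting, which overrides a segment default.

```typescript
// Hypothetical set of UI settings an adaptive layer is allowed to vary.
interface UiConfig {
  density: "compact" | "comfortable";
  tableColumns: string[];
  showOnboardingTips: boolean;
  defaultReportRangeDays: number;
}

// Each variation layer overrides only the fields it cares about.
type UiOverride = Partial<UiConfig>;

const baseConfig: UiConfig = {
  density: "comfortable",
  tableColumns: ["id", "name", "status", "updatedAt"],
  showOnboardingTips: true,
  defaultReportRangeDays: 30,
};

// Applies layers in order (general -> organization -> individual).
// Undefined values are skipped so a layer cannot accidentally erase a setting.
function resolveUiConfig(base: UiConfig, ...layers: UiOverride[]): UiConfig {
  const result: UiConfig = { ...base };
  for (const layer of layers) {
    for (const key of Object.keys(layer) as (keyof UiConfig)[]) {
      const value = layer[key];
      if (value !== undefined) {
        (result as Record<keyof UiConfig, unknown>)[key] = value;
      }
    }
  }
  return result;
}

// Example layers (all hypothetical values).
const segmentLayer: UiOverride = { density: "compact" }; // general variation
const orgLayer: UiOverride = { tableColumns: ["id", "status", "owner"] }; // organization variation
const userLayer: UiOverride = { showOnboardingTips: false }; // individual variation

const resolved = resolveUiConfig(baseConfig, segmentLayer, orgLayer, userLayer);
```

In an AUI, the layers themselves would be produced or tuned by AI rather than written by hand; keeping them as plain, inspectable overrides makes each adaptation easy to audit or roll back.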

This concept isn’t entirely new. Founders, developers, and designers already tailor experiences by hand, but those variations are hard to scale and maintain. AUIs automate this process, making UI adaptation more seamless and widespread.

How can UIs adapt?

We spent some time thinking about which key components could adapt to create a better user experience. Adaptive UIs can evolve in two fundamental ways: adjusting content and modifying interaction patterns.

Content Adaptation

- Summarization: Condensing information based on user preferences and past behavior.

- Notifications: Adjusting content, frequency, and delivery channels based on user context. Combining this with automated triaging can greatly improve the signal-to-noise ratio.

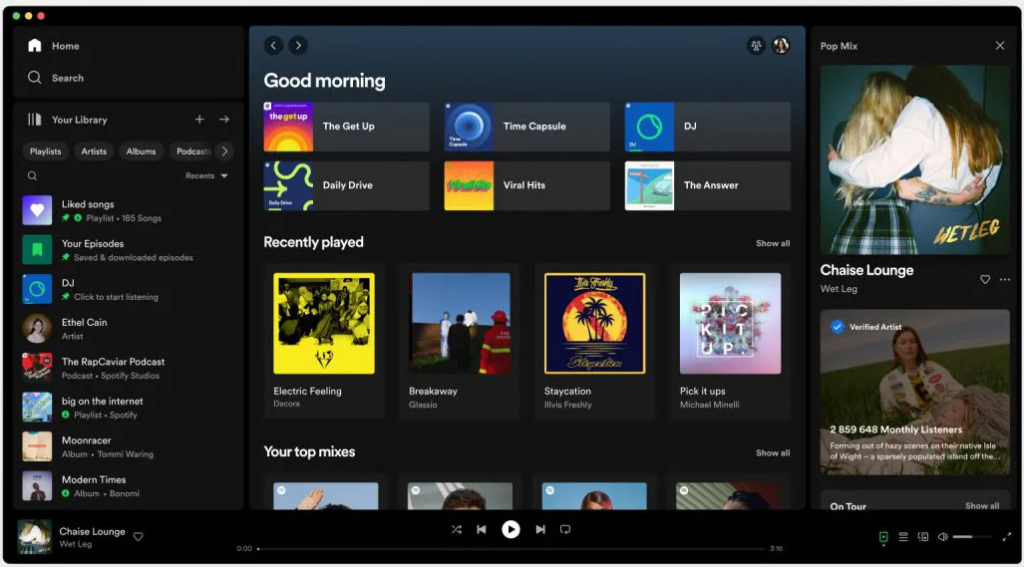

- Dashboard customization: Automatically prioritizing relevant data points and applying modifications in response to user prompts.

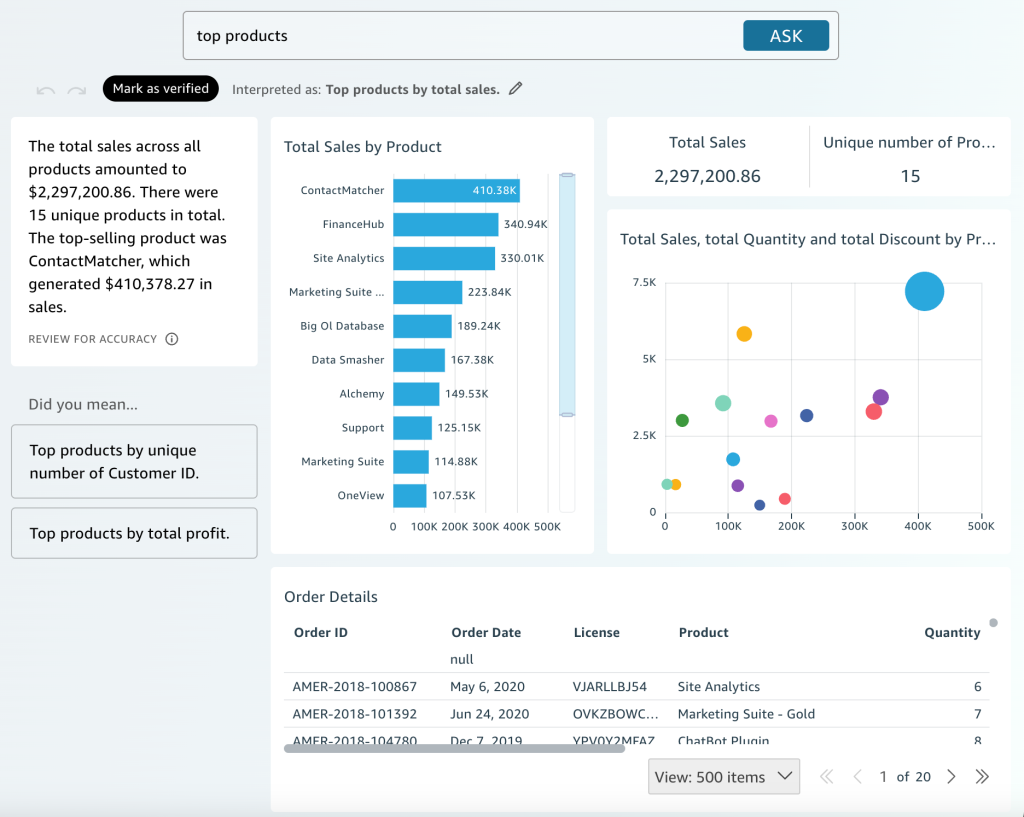

- Reports and data visualizations: Dynamically adjusting timespans, granularity, and key insights. Here, explainable AI can play an important role by informing users about the constraints of the data available to them.

- Suggested actions: Highlighting next steps based on common user behaviors.
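To make the notification idea more concrete, here is a minimal routing sketch. It assumes a triage step (a model or rules, not shown) has already assigned each notification a priority from 0 to 3, and that the app knows whether the user is in a focus mode and which topics they have muted. The names `AppNotification`, `UserContext`, and `routeNotification` are hypothetical.

```typescript
// Where a notification ends up; "digest" means batched into a periodic summary.
type Channel = "push" | "email" | "digest" | "suppress";

interface AppNotification {
  id: string;
  priority: number; // 0 (low) to 3 (critical), assumed to come from a triage step
  topic: string;
}

interface UserContext {
  inFocusMode: boolean;
  mutedTopics: Set<string>;
}

// Chooses a delivery channel from triaged priority plus the user's context.
function routeNotification(n: AppNotification, ctx: UserContext): Channel {
  if (n.priority >= 3) return "push"; // critical items interrupt, even for muted topics
  if (ctx.mutedTopics.has(n.topic)) return "suppress";
  if (ctx.inFocusMode) return n.priority >= 2 ? "email" : "digest"; // avoid interruptions
  if (n.priority >= 2) return "push";
  return n.priority === 1 ? "email" : "digest";
}

// Example: a user in focus mode who muted marketing messages.
const ctx: UserContext = { inFocusMode: true, mutedTopics: new Set(["marketing"]) };
const incoming: AppNotification[] = [
  { id: "a", priority: 3, topic: "security" },
  { id: "b", priority: 2, topic: "billing" },
  { id: "c", priority: 0, topic: "marketing" },
  { id: "d", priority: 1, topic: "comments" },
];
const routed = incoming.map((n) => routeNotification(n, ctx));
// routed: ["push", "email", "suppress", "digest"]
```

The thresholds here are arbitrary; in an adaptive system they would be tuned per user or organization, and explaining why an item was routed a certain way helps users trust the triage.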

Interaction Adaptation

- Navigation and layout: Changing menus, filters, table columns, and page structures to better match user workflows, organization workflows, and market segments.

- Action prioritization: Emphasizing commonly used functions while de-emphasizing less relevant ones.

- Flexible workflows: Letting users bypass or reorder steps based on their past actions, making system processes more efficient and natural.

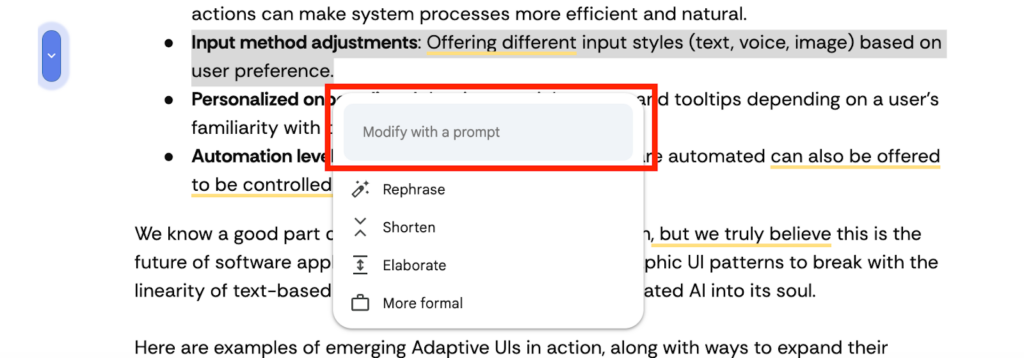

- Input method adjustments: Offering different input styles (text, voice, image) based on user preference.

- Personalized onboarding: Adapting tutorial tooltips to a user’s familiarity with the system.

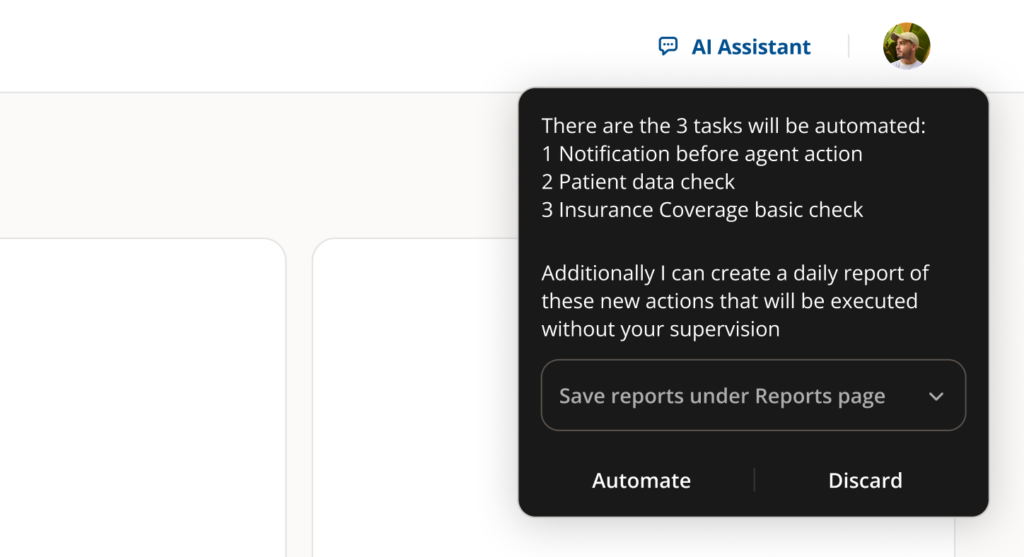

- Automation level: Exposing the degree to which processes are automated as something users can control.
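As an example of action prioritization, the sketch below ranks actions by a usage score in which each past use decays over time, so recent habits outweigh old ones. The half-life value, the `ActionUsage` shape, and the `prioritizeActions` helper are hypothetical choices for illustration; a production system might use richer signals such as role or current task.

```typescript
interface ActionUsage {
  actionId: string;
  timestamps: number[]; // epoch milliseconds of past uses
}

const HALF_LIFE_DAYS = 14; // hypothetical: a use loses half its weight every 14 days
const MS_PER_DAY = 86_400_000;

// Sum of exponentially decayed weights: a use today counts 1, one from 14 days ago counts 0.5.
function usageScore(u: ActionUsage, now: number): number {
  return u.timestamps.reduce((sum, t) => {
    const ageDays = (now - t) / MS_PER_DAY;
    return sum + Math.pow(0.5, ageDays / HALF_LIFE_DAYS);
  }, 0);
}

// Splits actions into emphasized ("primary") and de-emphasized ("overflow") groups.
function prioritizeActions(usages: ActionUsage[], now: number, maxPrimary: number) {
  const ranked = usages
    .map((u) => ({ id: u.actionId, score: usageScore(u, now) }))
    .sort((a, b) => b.score - a.score);
  return {
    primary: ranked.slice(0, maxPrimary).map((r) => r.id),
    overflow: ranked.slice(maxPrimary).map((r) => r.id),
  };
}

// Example: "export" was used more often, but only two months ago.
const now = Date.UTC(2024, 0, 31);
const layout = prioritizeActions(
  [
    { actionId: "export", timestamps: [now - 60 * MS_PER_DAY, now - 59 * MS_PER_DAY, now - 58 * MS_PER_DAY] },
    { actionId: "share", timestamps: [now - 1 * MS_PER_DAY, now - 2 * MS_PER_DAY] },
    { actionId: "archive", timestamps: [now - 3 * MS_PER_DAY] },
  ],
  now,
  2,
);
// layout.primary: ["share", "archive"], layout.overflow: ["export"]
```

De-emphasized actions move to an overflow menu rather than disappearing, which keeps the interface predictable while still favoring current habits.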

We know that much of this still sounds like science fiction, but we truly believe it is the future of software applications: a hybrid that still uses graphical UI patterns to break the linearity of text-based prompts while incorporating generative AI into its soul.

Here are examples of emerging Adaptive UIs in action, along with some of our internal explorations:

The Challenges of Adaptive UIs

Building Adaptive UIs isn’t just a design challenge; it demands a rethinking of how we architect digital products. Traditionally, interfaces are designed with fixed rules and structures, but AUIs require systems that can dynamically adjust based on context, user behavior, and AI-driven insights.

One of the biggest shifts is reducing hardwired business rules. Instead of rigid workflows, adaptation must be an interactive process where AI, users, and product teams collaboratively fine-tune experiences. This means exposing more UI elements as configurable parameters rather than fixed decisions.
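A minimal sketch of what "configurable parameters rather than fixed decisions" could look like, assuming each former business rule becomes a parameter with bounds set by the product team and a list of actors allowed to adjust it. The actor names, parameters, and `proposeChange` function are hypothetical. Out-of-range proposals are clamped rather than rejected, so an AI suggestion can never push a value outside what the team has approved.

```typescript
// Hypothetical roles that may adjust a parameter.
type Actor = "productTeam" | "organizationAdmin" | "user" | "ai";

interface NumericParam {
  value: number;
  min: number; // bounds chosen by the product team
  max: number;
  adjustableBy: Actor[];
}

// Hypothetical parameters that would otherwise be hardcoded rules.
const params: Record<string, NumericParam> = {
  dashboardWidgetLimit: { value: 6, min: 3, max: 12, adjustableBy: ["organizationAdmin", "user", "ai"] },
  approvalStepsRequired: { value: 2, min: 1, max: 3, adjustableBy: ["productTeam", "organizationAdmin"] },
};

type ChangeResult = { ok: true; value: number } | { ok: false; reason: string };

// Applies a proposed change if the actor is allowed, clamping it to the allowed range.
function proposeChange(name: string, newValue: number, actor: Actor): ChangeResult {
  if (!Object.prototype.hasOwnProperty.call(params, name)) {
    return { ok: false, reason: `unknown parameter ${name}` };
  }
  const param = params[name];
  if (!param.adjustableBy.includes(actor)) {
    return { ok: false, reason: `${actor} may not change ${name}` };
  }
  if (!Number.isFinite(newValue)) {
    return { ok: false, reason: "value must be a finite number" };
  }
  const clamped = Math.min(param.max, Math.max(param.min, newValue));
  param.value = clamped;
  return { ok: true, value: clamped };
}

const r1 = proposeChange("dashboardWidgetLimit", 20, "ai"); // allowed, clamped to 12
const r2 = proposeChange("approvalStepsRequired", 1, "ai"); // rejected: AI may not change it
```

This split lets AI, users, and product teams share the same tuning surface while each stays within its own permissions.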

At a technical level, AI-driven adaptation requires UI components to be more abstracted and modular. Instead of building interfaces with predefined structures, we need systems where an AI can recognize components, understand their functions, and modify them intelligently. This demands a more flexible design system and a way for AI models to interact with it safely.
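One possible way for an AI model to "recognize components" and still interact with the design system safely is a machine-readable manifest: each component declares what it does and which props it accepts, and any layout the model proposes is validated against that manifest before rendering. The sketch below assumes the model returns a JSON tree; the `manifest` entries, `LayoutNode` shape, and `validateLayout` function are hypothetical.

```typescript
// Describes a design-system component to both the AI model and the validator.
interface ComponentSpec {
  description: string;
  allowedProps: Record<string, "string" | "number" | "boolean">;
  allowsChildren: boolean;
}

// Hypothetical manifest; descriptions could be included in the model's prompt.
const manifest: Record<string, ComponentSpec> = {
  Page: { description: "Top-level page container", allowedProps: { title: "string" }, allowsChildren: true },
  MetricCard: { description: "Single KPI with a label", allowedProps: { metric: "string", label: "string" }, allowsChildren: false },
  DataTable: { description: "Tabular view of a dataset", allowedProps: { dataset: "string", pageSize: "number" }, allowsChildren: false },
};

// Layout tree as an AI model might propose it (e.g. parsed from JSON output).
interface LayoutNode {
  component: string;
  props?: Record<string, unknown>;
  children?: LayoutNode[];
}

const hasOwn = (obj: object, key: string): boolean => Object.prototype.hasOwnProperty.call(obj, key);

// Returns a list of problems; an empty list means the layout only uses known components and props.
function validateLayout(node: LayoutNode, path = "root"): string[] {
  if (!hasOwn(manifest, node.component)) return [`${path}: unknown component "${node.component}"`];
  const spec = manifest[node.component];
  const errors: string[] = [];
  for (const [prop, value] of Object.entries(node.props ?? {})) {
    if (!hasOwn(spec.allowedProps, prop)) {
      errors.push(`${path}: prop "${prop}" not allowed on ${node.component}`);
    } else if (typeof value !== spec.allowedProps[prop]) {
      errors.push(`${path}: prop "${prop}" should be ${spec.allowedProps[prop]}`);
    }
  }
  const children = node.children ?? [];
  if (children.length > 0 && !spec.allowsChildren) errors.push(`${path}: ${node.component} cannot have children`);
  children.forEach((child, i) => errors.push(...validateLayout(child, `${path}.children[${i}]`)));
  return errors;
}

// Example proposal with two mistakes: a string pageSize and an unknown component.
const proposal: LayoutNode = {
  component: "Page",
  props: { title: "Sales overview" },
  children: [
    { component: "MetricCard", props: { metric: "revenue", label: "Revenue" } },
    { component: "DataTable", props: { dataset: "orders", pageSize: "25" } },
    { component: "Carousel" },
  ],
};
const problems = validateLayout(proposal);
```

Rejected proposals can be sent back to the model with the error list, so the AI adapts the interface only through components the design system actually supports.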

Beyond technical feasibility, there is an open question about customization sharing. Should adaptations remain personal, or can they be shared across teams or even entire organizations? How do we balance personalization with maintaining a consistent experience?

At Arionkoder we are actively exploring these challenges. What are the architectural changes required? What technologies best support adaptive interfaces? In future explorations, we will dive deeper into these questions, sharing insights from our technical experiments and real-world applications.

Where Would You Start?

As with AI applications in general, it is easy to get carried away by the technical possibilities and lose sight of user needs. Despite the huge potential Adaptive UIs bring to the table, the best way forward is to start with small, high-impact adaptations:

- Identify key friction points where adaptation would benefit primary users.

- Extend those ideas to secondary users, ensuring the system remains flexible.

- Iterate, abstract, and refine the design over time by adapting the UI to real-world usage instead of theoretical assumptions.

Where would you start making your UI more adaptive?