Exploring regulatory approaches in the world of Artificial Intelligence: From self-regulation to comprehensive legislation, what is the way forward?

As we move deeper into a world shaped by AI, it’s clear that regulation will emerge sooner or later. Rather than recounting past events, my aim today is to paint a picture of the current approaches to regulating AI and the hurdles they face. It’s a fascinating yet complex landscape, much like meeting a friendly yet enigmatic three-headed creature.

Let’s start with a little context. It wasn’t until a couple of years ago that talking about AI became mainstream, but we have known and imagined it for several decades, as with HAL 9000 in 2001: A Space Odyssey. I can go even further back. In 1943, Warren McCulloch and Walter Pitts first laid out the concept of a “neural network” in “A Logical Calculus of the Ideas Immanent in Nervous Activity”. In 1956, the Dartmouth workshop took place, and John McCarthy had coined the term “Artificial Intelligence” for it.

Technologies usually germinate in contexts of need: wars, shortages, famines, pandemics and more. But they can only reach their full potential in the right environment. This is why the Internet had to wait for cheaper hardware and faster connections to become omnipresent. It is also why cellular telephony had to wait for faster data transfer protocols before it could put the world in our hands. Those are just two examples.

Everything in history happens with a certain degree of “predictability” and, as expected, regulation is always the last to arrive at the party. Although it is usually a less exciting part of development, every technology needs it. Aunt May quotes a very old adage often traced back to the 1st century BC: “With great power comes great responsibility.” Much like her advice, each technology can be as good or as bad as the hands that decide to use it.

Something along those lines is happening with AI. Maybe it all comes down to the ethical dilemma it potentially represents. Or maybe it’s about its geopolitical relevance, or something else entirely. But the fact remains that there are already different fronts and stances in the regulatory frameworks that try to “govern” the scope of AI and its future implementations. Three approaches stand out in particular: those of the United States, Europe, and China.

The United States and AI regulation

In the fast-paced world of AI innovation, the U.S. leads as the main producer of AI tools. Despite that, there’s no all-encompassing federal regulation of AI yet. Recent public debate has focused on the impact of generative AI, especially since OpenAI launched ChatGPT. These technologies raise questions about ethics, privacy, and control over machine-generated information.

Tech titans themselves have stepped up with proposed solutions to AI’s challenges, and some of them have presented these proposals to Congress. This shows the industry’s proactive interest. The market is shifting from one that regulates itself to one that takes part in its own regulation alongside other actors. The Distributed AI Research (DAIR) Institute, for instance, serves as a hub for addressing these concerns through ethically sustainable AI that benefits the communities it reaches.

It is in this context that the United States government has sought commitments from technology companies on how they develop AI products. These agreements may have implications for security, privacy, and fairness in the use of AI. Specifically, they address three areas: making AI accessible for education and non-profit activities, warding off deepfakes, and putting the brakes on AI systems that control critical infrastructure through effective security measures (cue Skynet vibes).

Europe and its particular approach

In Europe, much like in the United States, AI legislation is a work in progress. However, there’s a buzz of anticipation around the European Union’s advances in AI regulation. Attention centers on the impending enactment of the EU Artificial Intelligence Act (AI Act), proposed by the European Commission and slated for implementation this year.

The EU’s regulatory efforts are expected to set the global standard, just as the General Data Protection Regulation (GDPR) did after it took effect in 2018. The AI Act could catalyze a similar protective shift, offering multinational organizations a framework for aligning their AI practices with EU law.

A significant difference from the US approach is the AI Act’s risk-based regulatory strategy. Under this framework, AI systems are categorized into four risk levels (unacceptable, high, limited, and minimal risk), and each level carries corresponding obligations for providers and users.

The AI Act doesn’t mince words when it comes to prohibitions and restrictions. It prohibits AI practices that pose an unacceptable risk to human safety, such as the use of subliminal or manipulative techniques resulting in physical or psychological harm. Additionally, it lays down strict restrictions on the use of remote biometric identification and addresses other high-risk domains, including security, health, and the protection of individuals’ fundamental rights.

China’s recipe for regulating AI

In China, AI regulation takes a fascinating twist that reflects the country’s unique perspective on governance. Leading the charge is the Cyberspace Administration of China (CAC), the central regulatory body for all things AI. With its mission to oversee AI development and use, the CAC underscores China’s proactive approach to keeping a close eye on AI-related activities.

China has published three sectoral regulations since 2021, reflecting a targeted approach to different aspects of AI. They cover recommendation algorithms, deep synthesis of Internet information, and generative artificial intelligence services. Chinese AI regulations include specific prohibitions, such as a ban on fake news and deepfake videos, as well as requirements to label AI-generated content. This suggests a focus on protecting users and ensuring accuracy and transparency in the use of AI.

Tackling the challenge of regulating AI in China involves a layered approach, including specific regulations for different sectors, clear regulatory roles, and even public consultation in the rule-making process. This step-by-step strategy reflects the Chinese government’s commitment to overseeing AI development responsibly.

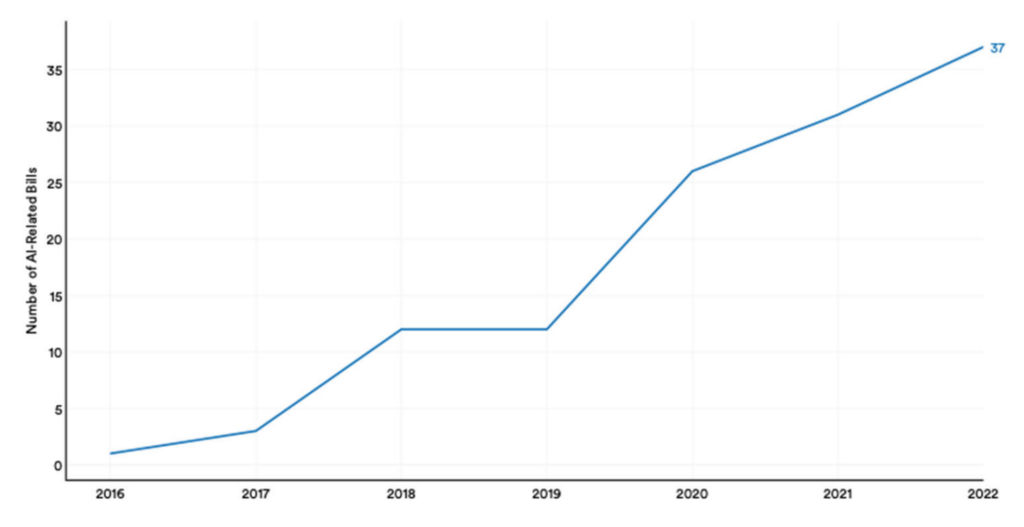

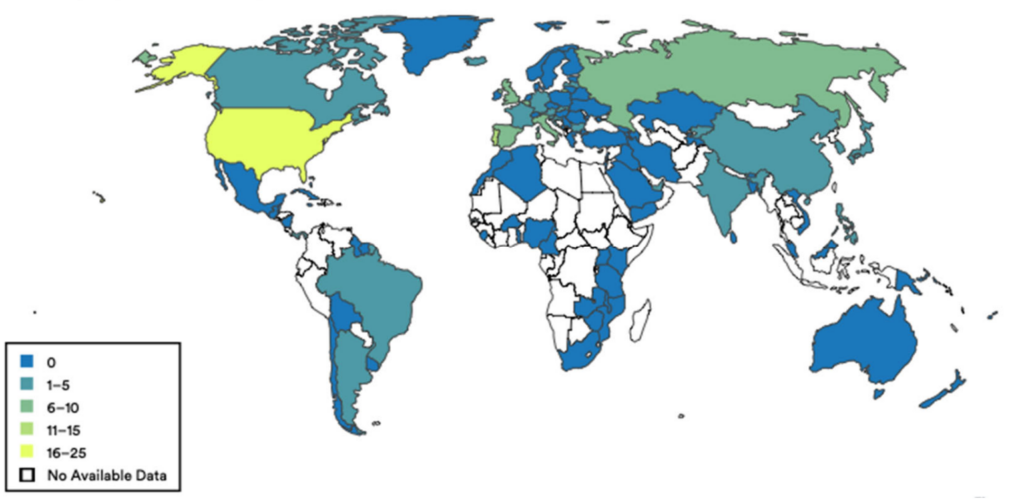

While the USA, Europe, and China serve as prominent examples, it’s important to note that they’re not the sole contributors to shaping AI regulatory frameworks. Numerous countries are actively engaged in this endeavor, as shown below:

Source: HAI Stanford University (2023)

The upcoming “battle of models” deserves a separate chapter. It concerns open versus controlled use of AI, an “open AI vs. closed AI” contest reminiscent of the days of Netscape vs. Internet Explorer. That conversation can wait, though, since it will surely fill several more articles.

Conclusion

There’s been talk of a consensus brewing around the European proposal for AI regulation, but let’s take a step back and ponder: what’s the purpose of AI? What role do we want AI to play? The push for AI regulation stems from recognizing its immense power as a tool – whether in commerce, education, the military, or even geopolitics.

No matter the angle you approach it from, one thing’s clear: today there’s a growing concern for safeguarding personal data, protecting copyrights, and cracking down on the malicious use of AI for things like tarnishing reputations, running smear campaigns, or creating those infamous deepfakes. But here’s the kicker: when we say “today,” we’re acknowledging that AI is just one piece of a much larger puzzle. And on this chessboard of power dynamics, there are players with their own agendas.

It’s a reminder that the rules and regulations surrounding AI aren’t set in stone – they can be reshaped to serve the interests of those who hold the reins. So, as we navigate the ever-evolving landscape of AI regulation, let’s keep our eyes on the bigger picture and the players at the table.

If you want to explore these topics in-depth, don’t hesitate to reach out to us at [email protected]. Together, we can create safe AI.