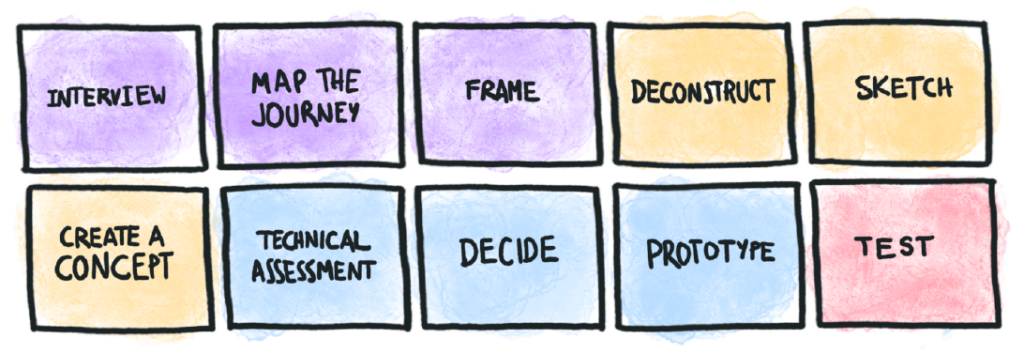

Since I joined Arionkoder in 2021, we have been working on products that needed different types of AI features. As I mentioned in a previous talk, we started by creating wrapper apps that connect to AI Models, got frustrated by how siloed everything was, and then decided to build bridges with the experts. We created a series of activities and Miro boards to bring domain experts, ML experts, and design experts together to collaborate on Model definitions. We tried different formats and structures, and we struggled for months to find a good way to define and design user-centered AI Products. We kept tweaking and refining these tools, and in this article I’m proud to present our Introductory AI Design Sprint series of workshops, crafted with a strong passion for user-centered approaches and for exploring AI as a new design material.

Why we chose the Design Sprint format

The Design Sprint format is one that our team manages expertly, and that several of our customers are already familiar with. We have been hearing praise for how effective it is as a tool for rapid innovation and iterative product development, so I just want to begin by highlighting what is valuable about it:

- Framing time helps us to prototype and test ideas fast, contributing to a learn-by-doing mindset where we don’t cling to discussing decisions for a long time. The clear structure and quick wins generate momentum and enthusiasm, which can carry over into further development phases.

- It fosters an environment of innovation. The collaborative activities and the structured nature of the sprint—moving from defining problems to testing ideas—help uncover new and original solutions to complex challenges. The inclusion of multiple perspectives here is key to us, as it leads to more holistic solutions.

- It helps with risk reduction: Testing ideas with prototypes before committing to full-scale development helps reduce the risk of building something that users don’t need or want. This can save organizations time and resources, and potentially avoid costly failures.

There is something else that we find valuable: by going through it with our ML Experts, we discovered that this understand -> build -> test -> iterate cycle is the same one they use in ML engineering, to define and refine the models they create. It was a surprise to us, but we think that’s the main reason they quickly understood both our way of working and the value of the Design Sprint: for them, it is an even more lo-fi format to make progress. I might be biased here from my particular Arionkoder experience, and I’m interested in hearing about the experiences of others.

Setup details

Just for the sake of making the obvious more obvious: you can’t skip the presence of ML Experts throughout the whole process. It’s key for collaboration and for deepening everyone’s understanding of how ML products work. If they are part of the project but they don’t engage in this phase, you are setting yourself up for failure.

You will also need a facilitator/coordinator who has experience running Design Sprints or similar Design Thinking activities.

Another important thing to notice is that AI is a new material that designers and stakeholders are still building their intuition about. You therefore need to do more field-leveling: explaining how it works and what its capabilities are, and helping the team decompose ideas into viable products. How much of this you need depends on the team’s level of expertise, but we have found that introducing how ML works through brief pieces of training during the process makes it more engaging for everyone.

Lastly, this is an Introductory workshop in the sense that it’s designed to introduce teams to creating and designing AI Products, highlighting how working with this new material changes the current ways of working, and providing training in a learn-by-doing approach.

Adding new perspectives and new knowledge, and making the sprint introductory, means extending the time beyond the typical 1-week Design Sprint. But as we mentioned before, time framing helps maintain the experimental and focused spirit of the Design Sprint. For this reason, we recommend running the AI Design Sprint over 2 weeks. This could change based on your prototyping preference, as you will learn later.

Let’s get into the phases.

First phase: Understanding

Recommended length: 3 sessions + User interviews + asynchronous debrief time for each session.

In this phase, we recommend starting with user and expert interviews. Building empathy is important for the whole team, and hearing directly from people involved in the challenge is key to this. Nothing beats starting from understanding. We know finding the experts and scheduling is hard, but it is such a shortcut to learn more about the problem that you don’t want to miss it.

We then move into a collaborative Journey Mapping activity, where we materialize the workflows into virtual sticky notes and arrows to capture critical paths and learn about the process details. Because Journey Mapping focuses on workflows, you will find that it naturally prepares you for the next thing you want to do: finding opportunities in those workflows that can be improved with AI automation and augmentation.

That’s exactly what you do during the third activity: review the Journey Map again, annotating opportunities for AI Augmentation and AI Automation, together with related data needs. We consider this part of the Understanding phase because it creates alignment around how to frame the challenge. At this point you are still asking: What’s crucial in this user’s journey? Does it need AI, or can it be solved through heuristics? Which of these workflows matter most to focus on? How is it all interconnected? What is worth exploring in the future?

The final activity we do in the Understanding phase is to create a problem statement, which allows us to move to the ideation phase with a point of view that summarizes the shared understanding we created.

Details to consider:

- You can swap the order of Interviews and Journey Mapping, depending on the initial status of the product. If stakeholders already have a Journey Map, or you’re redesigning a process, you might want to start by reviewing what the team already has and then run more User and Expert interviews to explore and validate.

- Depending on the team, there could be a need for prior training on Automation and Augmentation, how these affect user experiences in different ways, and which situations make more sense for each one. We cover this with a 10-slide training that takes around 15 minutes.

Second phase: Ideation

Recommended length: 3 sessions + asynchronous activities.

We start this phase by integrating part of what we learned from Carnegie Mellon HCII’s AI Design Kit: introducing AI capabilities and letting users play with them as cards. After introducing them briefly, we review the detected opportunities in the journey map and we start decomposing the relevant ones for the challenge as chained AI Capabilities.

This step is crucial because it provides a better understanding to everyone:

- AI Experts can easily translate these decomposed capabilities into what needs to be built, giving them a better idea of the implied effort.

- Non-AI Experts can better understand the complexities behind the opportunities: by splitting each opportunity into a composition of combined capabilities, they will deepen their knowledge and gain skills in how to communicate AI feature needs in detail.
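To make the idea of "chained AI Capabilities" more concrete, here is a minimal sketch of how one opportunity from the Journey Map might be written down as a chain. This is an illustration and not part of the workshop materials: the `Capability` and `Opportunity` classes, the support-email example, and the capability names are all assumptions, and they are not taken from the AI Design Kit cards.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Capability:
    """One AI capability in a chain (hypothetical names, for illustration only)."""
    name: str
    input_data: str
    output: str


@dataclass
class Opportunity:
    """An opportunity annotated on the Journey Map, decomposed into a chain."""
    description: str
    kind: str  # "automation" or "augmentation"
    chain: list[Capability] = field(default_factory=list)

    def data_needs(self) -> list[str]:
        """Inputs that must come from outside the chain,
        i.e. that no earlier step in the chain produces."""
        produced: set[str] = set()
        needs: list[str] = []
        for step in self.chain:
            if step.input_data not in produced and step.input_data not in needs:
                needs.append(step.input_data)
            produced.add(step.output)
        return needs


# Hypothetical example: routing support emails to the right team.
triage = Opportunity(
    description="Route incoming support emails to the right team",
    kind="automation",
    chain=[
        Capability("detect language", "email text", "language"),
        Capability("classify topic", "email text", "topic"),
        Capability("estimate urgency", "topic", "urgency score"),
    ],
)

print([step.name for step in triage.chain])
print(triage.data_needs())
```

Writing the chain down this way tends to surface the two things each group cares about: the number of steps gives AI Experts a rough sense of effort, and the external data needs show Non-AI Experts what the idea depends on.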

For the next session, we jump into the typical visual sketching activities of the Design Sprint process: Note-taking -> Sketching -> Crazy 8s. Whether or not the product has a graphical UI, visual sketching can help you represent ideas in context, and we were surprised to learn how much ML Experts enjoyed being able to capture this extra context.

But there is a small twist: if you’re familiar with the Design Sprint process, you might have noticed that we are skipping the Lightning Demos. This is because asking the team to find related UIs and examples still seems too hard. Good UI patterns for Human-AI interaction are still scarce, and the ones that exist are often immature ideas too centered on prompting. Instead, we briefly present content from the People+AI Research Chapters, which cover what needs to be communicated and a collection of patterns related to this.

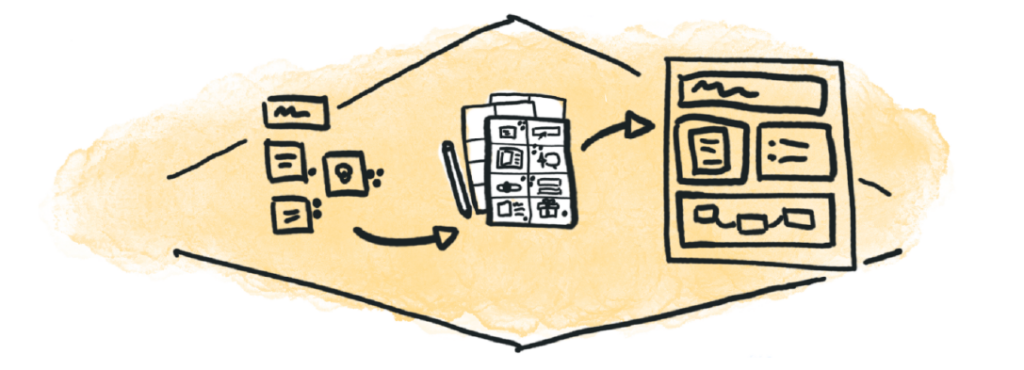

For the Conceptualizing activity, we have come up with our own template. It’s based on presenting an AI concept, so it moves away from the common visual representation you will see in a Design Sprint. With our template, we put the focus on how the why, the capabilities, the data needed, and the output align. As a second level of detail, we ask for a visual representation of the logical order and for the kind of optimization expected between precision and recall.
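For team members who are new to the precision and recall trade-off, a small worked example can help. The sketch below uses made-up scores and labels (they are assumptions, not data from any project) and a hypothetical helper, `precision_recall`, to show how moving a decision threshold trades one metric for the other.

```python
def precision_recall(scores, labels, threshold):
    """Compute precision and recall when every score >= threshold is flagged.

    precision = TP / (TP + FP): of the items we flagged, how many were right.
    recall    = TP / (TP + FN): of the items we should have flagged, how many we caught.
    """
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y for p, y in zip(predicted, labels))
    fp = sum(p and not y for p, y in zip(predicted, labels))
    fn = sum((not p) and y for p, y in zip(predicted, labels))
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


# Made-up model confidence scores and the true answers for six items.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.9, 0.7, 0.35):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

With these numbers, a strict threshold of 0.9 flags a single item and gets it right (precision 1.00) but misses two of the three true cases (recall 0.33). A lenient threshold of 0.35 catches all three (recall 1.00) at the cost of one false alarm (precision 0.75). Which side to favor is the decision the template asks the team to make explicit.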

Once we get to this point, the team performs a Design Critique activity, to add questions and comments to the ideas presented. We then do a brief async Technical Assessment of each of the concepts to provide more information on data needs and feasibility.

After this information is gathered, we bring back our problem statement to vote on and select the ideas to prototype and test.

Details to consider:

- We initially thought Visual Sketching could be an optional part of the process, but even in cases where there weren’t a lot of Graphical User Interface elements to consider, it proved to be useful. We are now executing it every time.

- For this phase, we have materials prepared to train the team on different aspects of the Model definition. We present them based on the level of knowledge of the team, and time availability.

Third phase: Prototyping

Recommended length: 2 brief sessions for alignment + asynchronous activities.

The prototyping stage depends a lot on customer preference. The minimal version is a Figma prototype, Wizard of Oz techniques, and a well-coordinated script. This is enough to learn how users will interact with the ML functionality and how it adapts to their contexts, but it won’t tell you anything about the AI outputs and performance.
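If the prototype has any code behind it, the Wizard of Oz script can be as simple as a lookup from test moments to pre-written outputs. The sketch below is one possible way to do that, not the workshop's own tooling: `WizardOfOzModel`, the step names, and the canned replies are all hypothetical. It is a scripted variant of the technique; in a classic Wizard of Oz setup a person produces the replies live.

```python
class WizardOfOzModel:
    """Stands in for the ML model during a test session by returning scripted outputs."""

    def __init__(self, script, fallback="Let me check that for you."):
        self.script = script      # maps a moment in the test to a canned output
        self.fallback = fallback  # used when the participant goes off-script
        self.log = []             # what was shown, kept for the debrief session

    def respond(self, step):
        reply = self.script.get(step, self.fallback)
        self.log.append((step, reply))
        return reply


# Hypothetical script for a test session.
wizard = WizardOfOzModel({
    "upload_invoice": "Detected supplier: ACME. Total: 1,240.00 USD.",
    "ask_due_date": "The due date appears to be March 3 (low confidence).",
})

print(wizard.respond("upload_invoice"))
print(wizard.respond("ask_refund"))  # not in the script, so the fallback is returned
```

Keeping a log of which steps fell back to the default reply is a cheap way to learn where participants left the script.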

In other cases, there can be a combination of Figma with a Proof of Concept Model, or some stitching activities to incorporate existing ML Models into the prototyped experience. This extends the time needed to execute it, but it can provide more details about how the ML model performs.

We recommend having at least two sessions for alignment. During the first one, you will decide what is going to be tested and set an initial order of moments, using the Storyboard canvas for this. The second session will be useful for checking the prototype and the script together.

Details to consider:

- If the team you’re working with is up for building something beyond Figma prototypes, the facilitator still needs to be present and make sure they follow the spirit of building fast and only enough to learn. Remember that some teams won’t have a learn-fast mindset and will need guidance.

- Several tools can be used to build a Proof of Concept ML Model, and the right choice depends on your context. While this is not part of the workshop, you can ask the Technical team to research tools for prototyping ML Models and how they fit with the stack they use or already know.

Fourth phase: Testing

Recommended length: 5 tests with potential users + debrief session.

Once you get to this stage, you will feel that you have learned a lot. But testing your prototype with users will double that. It might be hard to schedule, but it’s so informative and such an efficient use of time that we consider it the most important step of the whole process.

For this step, there is nothing that differs from the normal Design Sprint in our experience. Depending on what you’re looking to validate, you might want to focus on different aspects. Some questions that can guide you are: Do users recognize when they are interacting with an ML-based feature? Does the UI do a good job of managing their expectations around it? How clear and actionable are the ML outcomes presented? What are users expecting in terms of precision?

Try it now

If you’ve got to this point, I’m pretty sure you are interested enough to give it a try. We want more teams to try it, so feel free to do it, and if you need more guidance or just want to comment on how it went, just write to me.

Some final words

This series of workshops was designed mostly by me, but it wouldn’t be what it is without the great help I got from Vico de Santiago, Nacho Orlando, Alejo Hernández, Juan Vildosola, and JP Vargas, all from Arionkoder. Also, through the process, I have been interacting with an AI Design community that I help to coordinate. Learn more at: https://medium.com/latam-ai-ux.

I’ll be sharing part of what we learned in our course: Diseñando IAs con UX en mente (Spanish, starting May 8)

Resources and main inspirations

Throughout the process of getting to these workshops, there were also important influences and resources. The Design Sprint from Google is at the base of it, and People+AI Research from Google and Carnegie Mellon’s AI Design Kit were also strong references.