The Dark Side of Cutting Corners

The EU AI Act defines four levels of risk: minimal, limited, high and unacceptable (Consilium, 2025). The minimal level covers the majority of AI systems, which pose no meaningful threat to users and therefore remain unregulated, just as they were before the Act. Video games usually fall into this category. The limited level covers AI systems that carry some risk at certain steps of their use, so a transparency measure is required to make users aware of those risks. ChatGPT belongs to this category. The high level covers AI systems that can harm users if not used properly; these must meet strict requirements and obligations before they can enter the EU market. Autonomous driving is a clear example of this category. Finally, the unacceptable level covers AI systems considered a clear threat to people’s safety and rights, which are therefore banned, for example social scoring and predictive policing.

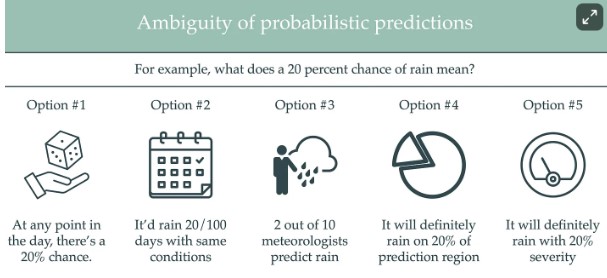
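To make the four tiers concrete, here is a minimal Python sketch of the classification as a lookup table. The names `RiskTier`, `OBLIGATIONS`, `EXAMPLES` and `describe` are hypothetical, the example systems are the ones mentioned above, and the one-line obligations are paraphrases for illustration, not legal guidance.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels, ordered from least to most restrictive."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Illustrative paraphrase of what each tier implies (assumption, not legal text).
OBLIGATIONS = {
    RiskTier.MINIMAL: "no additional regulation",
    RiskTier.LIMITED: "transparency measures",
    RiskTier.HIGH: "strict requirements before entering the EU market",
    RiskTier.UNACCEPTABLE: "prohibited",
}

# Example systems taken from the paragraph above.
EXAMPLES = {
    "video game": RiskTier.MINIMAL,
    "general-purpose chatbot": RiskTier.LIMITED,
    "autonomous driving": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
    "predictive policing": RiskTier.UNACCEPTABLE,
}


def describe(system: str) -> str:
    """Return a one-line summary of the tier and obligation for a known example."""
    tier = EXAMPLES[system]
    return f"{system}: {tier.name.lower()} risk -> {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for name in EXAMPLES:
        print(describe(name))
```

Ordering the enum values lets a team compare tiers directly, for instance to check whether a planned feature would push a product into a stricter tier.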

After some consideration, we would categorize an AI companion as a high-risk AI solution under the EU AI Act. Potential misuses of an AI companion range from manipulative upsells based on the user’s emotional state to unsafe medical advice and even addictive loops within the user experience. All of these risk scenarios are connected to the concept of a “risk score” (Thought on AI Policy, 2023), which expresses the probability of a given scenario. The main issue is that a risk score can be ambiguous, as people can interpret the same probability in different ways (see image below for an example).

(Source: Thought on AI Policy, 2023)

One potential solution to this problem is to focus on outcomes rather than on how the AI’s risk score is communicated, optimizing the joint decisions that AI and humans make collaboratively (Thought on AI Policy, 2023). Nevertheless, this can be insufficient in some cases, because humans would still need to understand the whole process in which the AI system takes part, and therefore its impact. There is an alternative, though: if humans are able to interpret and understand AI systems, it becomes possible to build trust and genuine collaboration between AI and humans.

Model cards show how technical documentation can make an AI system more transparent: they document model data and training, risk identification and mitigation, and societal impacts, among other key aspects (e.g. ChatGPT’s model card: GPT-4o System Card, 2024). Unfortunately, understanding everything in a model card demands a high technical level, requiring at least a basic level of coding and math. Even though model cards are a good approach to transparency, they are still not explainable enough for an ordinary user who interacts with AI systems.

Coming back to AI companions, users are used to interacting with chatbots through a chat window that offers little information. They are not shown a model card or a user manual explaining how answers are produced. As explained above, this can create misuse scenarios, because it exploits the fact that users know little about an AI system’s inner workings, how it uses conversation data, or how it handles privacy. Just take a look at Meta’s AI companion and ChatGPT in the image below: each shows the first point of contact with a user, prompting them to ask a question or start a conversation straight away with little further explanation.

Moreover, AI companions that target lonely individuals with potential psychological issues pose a safety risk, because harmful conversations can arise unexpectedly and the system can give unintentionally negative responses. Developing AI companions requires strong ethical guidelines to build public trust, since negative experiences with chatbots or AI friends can deter future adoption. Transparency is crucial to building this trust: AI companions should provide explainable information so that users understand as much as possible about how outcomes are produced, which data is used as input and output, and which modules exist inside the system.
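As a rough illustration of what such explainable information could look like at the first point of contact, the sketch below models a plain-language disclosure in Python. The class `CompanionDisclosure`, its fields and the wording of the notice are all hypothetical; they are one possible shape, not a description of any existing product.

```python
from dataclasses import dataclass, field


@dataclass
class CompanionDisclosure:
    """Hypothetical first-contact disclosure for an AI companion."""
    is_ai: bool = True
    data_collected: list[str] = field(default_factory=list)
    data_uses: list[str] = field(default_factory=list)
    modules: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Build a short notice to show before the first message."""
        lines = []
        if self.is_ai:
            lines.append("You are talking to an AI, not a person.")
        sections = [
            ("What we collect", self.data_collected),
            ("How we use it", self.data_uses),
            ("What is inside", self.modules),
            ("What I cannot do", self.limitations),
        ]
        # Empty sections are skipped so the notice stays short.
        for title, items in sections:
            if items:
                lines.append(f"{title}: " + ", ".join(items) + ".")
        return "\n".join(lines)


if __name__ == "__main__":
    notice = CompanionDisclosure(
        data_collected=["chat messages"],
        data_uses=["generating replies"],
        modules=["language model", "safety filter"],
        limitations=["give medical advice"],
    )
    print(notice.render())
```

Keeping the disclosure as structured data rather than free text means the same source can feed the chat window, a settings page and internal documentation without drifting apart.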

Framework for Responsible AI Companions

Now let’s focus on how to actually build AI companions that fulfill the transparency promise and thereby build real trust between AI and humans. Before we do that, let’s define clearly what transparency and explainability mean, as the two terms are constantly used interchangeably in AI literature. We will then bring those concepts together into a potential framework that ensures AI companions are built with them as core values, thus creating responsible AI companions.

Transparency is usually defined as being open and honest, with communication as the preferred tool (Introduction to AI Accountability & Transparency Series, 2023). We have implicitly relied on this in previous chapters; however, a clear definition makes the framework more powerful, as it leaves no room for ambiguity. Transparency in AI comes in three types: algorithmic, interaction and social (Hayes, P., van de Poel, I. & Steen, 2023). Algorithmic transparency means that the AI system explains the logic and processes behind the algorithm. This is tied to what model cards attempt to do: detail how data is processed or how the system reaches a decision. Interaction transparency concerns how humans interact with AI: it gives users guidance on how to collaborate with the system by helping them understand its capabilities and limitations. Social transparency concerns the impact of AI on society as a whole.

The EU’s ethical guidelines provide a clear picture of what explainability is: the ability to explain both the technical processes of an AI system and the related human decisions (Ethical Guidelines for Trustworthy AI, 2019). One key aspect is that the explanation has to be understandable to the common user, which is why we believe model cards can fall short of this goal. A transparent AI system therefore needs both explainable technical processes and explainable human decision making. While interpretability is a related idea, we will focus on transparency and explainability by examining two frameworks: privacy by design (used in Canada) and the EU’s ethical guidelines for trustworthy AI.

The privacy by design framework (Information & Privacy Commissioner Canada) has 7 key principles:

- Proactive, not reactive: It seeks to prevent privacy issues before they occur instead of relying on reactive measures

- Privacy as default: The design process prioritizes the maximum degree of privacy for the user, protecting their data along the way through:

    - Purpose specification -> Communicates to users how personal information will be collected, used and retained

    - Collection limitation -> Information is collected fairly, lawfully and only for the specified purpose

    - Data minimization -> The collection of personally identifiable information is kept to a strict minimum

    - Use, retention and disclosure limitation -> Personal information is used, retained and disclosed only for the purposes the user has consented to

- Privacy embedded into design: Privacy sits at the core of the solution design; it is an essential component that does not hamper functionality

- Full Functionality: Privacy doesn’t compete with other interests inside the solution; it is treated as a positive-sum, “win-win” approach. Instead of trading privacy off against other goals, it seeks to accommodate them all.

- End-to-End Security, Full Lifecycle Protection: Data is protected throughout its entire lifecycle, from the moment it is collected until it is destroyed

- Visibility & Transparency: It assures all stakeholders that the objectives and promises of privacy by design are upheld, because all information, rules and objectives are visible to users and providers, thereby creating transparency.

    - It uses fair information practices related to openness, accountability and compliance, concepts closely tied to transparency as a whole.

- Respect for User Privacy: Solutions should be built with a user-centered approach, always keeping user privacy as the priority and offering user-friendly options.

    - It uses fair information practices such as consent, accuracy, access and compliance.
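Two of the principles above, privacy as default and data minimization, translate fairly directly into code. The Python sketch below is one way to express them under stated assumptions: `PrivacySettings`, `ALLOWED_FIELDS` and `minimize` are hypothetical names, and the purposes and field names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacySettings:
    """Hypothetical per-user settings; every optional data use starts switched off."""
    store_chat_history: bool = False
    use_chats_for_training: bool = False
    personalized_offers: bool = False


# Hypothetical purposes and the only fields each one is allowed to see.
ALLOWED_FIELDS = {
    "reply": {"message", "language"},
    "billing": {"user_id", "plan"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields needed for the stated purpose.

    An unknown purpose gets an empty record, so nothing is shared by accident.
    """
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    record = {"message": "hi", "language": "en", "mood": "sad", "user_id": 7}
    print(PrivacySettings())
    print(minimize(record, "reply"))
    print(minimize(record, "marketing"))
```

The design choice worth noting is that the protective behavior requires no action from the user: settings are off until switched on, and a purpose nobody declared receives no data at all.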

On the other hand, the EU’s ethical guidelines for trustworthy AI set out the following requirements:

- Human agency and oversight: AI systems should support informed individual choices and fundamental rights through robust human oversight mechanisms such as human-in-the-loop (HITL: humans can intervene in every decision cycle of the system), human-on-the-loop (HOTL: humans intervene during the design of the system and monitor its operation), and human-in-command (HIC: humans oversee the overall activity of the system and decide when and how to use it).

- Technical Robustness and safety: To minimize and prevent unintentional harm, AI systems must be resilient, secure, safe (with fallback plans), accurate, reliable, and reproducible.

- Privacy and data governance: Beyond complete privacy and data protection, robust data governance is crucial. This includes maintaining data quality and integrity, and establishing legitimate data access protocols.

- Transparency: AI companions need transparent data, systems, business models, and traceability. Their operations and decisions must be clearly explained to stakeholders. Users should be informed they are interacting with AI and understand its capabilities and limits.

- Diversity, non-discrimination and fairness: To prevent negative outcomes like marginalization and increased prejudice, AI systems must avoid unfair bias. Promoting diversity means ensuring accessibility for everyone, including those with disabilities, and involving relevant stakeholders throughout the AI lifecycle.

- Societal and environmental well-being: AI systems must be sustainable and environmentally conscious, benefiting all of humanity, including future generations. Their design should carefully consider the environment, encompassing all living beings, and their broader social and societal consequences.

- Accountability: AI systems require responsibility and accountability mechanisms, including auditability for algorithms, data, and design, especially in critical applications. Adequate and accessible redress should also be ensured.
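To show how human oversight and accountability from the list above might meet in practice, here is a minimal Python sketch of a gate that routes sensitive exchanges to a human reviewer and records every decision for later audit. Everything in it is an assumption made for illustration: the `OversightGate` class, the `SENSITIVE_TERMS` keyword list and the log format are hypothetical, and simple keyword matching stands in for the far more careful classifier a real companion would need.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical keyword list; real systems need a much more robust classifier.
SENSITIVE_TERMS = ("medication", "dosage", "self-harm", "suicide")


@dataclass
class OversightGate:
    """Decides whether a draft reply is sent or held for human review."""
    audit_log: list = field(default_factory=list)

    def route(self, user_message: str, draft_reply: str) -> str:
        """Return 'send' or 'human_review' and record the decision."""
        text = f"{user_message} {draft_reply}".lower()
        flagged = [term for term in SENSITIVE_TERMS if term in text]
        decision = "human_review" if flagged else "send"
        # Every decision is logged, including the ones that pass, for auditability.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "decision": decision,
            "matched_terms": flagged,
        })
        return decision


if __name__ == "__main__":
    gate = OversightGate()
    print(gate.route("What dosage should I take?", "Take two."))
    print(gate.route("Tell me a joke", "Why did the robot cross the road?"))
    print(len(gate.audit_log), "decisions logged")
```

Logging only the decision and the matched terms, rather than the full conversation, keeps the audit trail useful while staying consistent with data minimization.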

After analyzing both frameworks, it’s possible to see similarities and some differences in their approaches. As you know by now, we aim to build our AI companion with ethics in mind, and we prioritize transparency. Several concepts, like explainability and privacy, have been thrown around, but at the end of the day the objective remains the same: build AI solutions that earn their users’ trust and uphold the promises made.

We believe that building AI solutions as black boxes is a thing of the past. It is our duty to design products that users like to use, while teaching them about the inner workings and main components. Being honest with our users, especially those who turn to an AI companion during difficult times in their lives, is a must and a design choice (similar to how privacy is set as the default in privacy by design).

Just think about it this way: if you look for a friend in an AI companion, would you trust a friend who uses your conversation data without your knowledge? With sensitive topics that could arise in a conversation, what would you think of a friend who takes advantage of them and tries to sell you something? In conclusion, we will create a safe path for our AI companion, always having empathy for our users and applying the key concepts of both the EU’s ethical guidelines for trustworthy AI and privacy by design, because we care for our users as we would if they were our friends.