Introduction

The Model Context Protocol (MCP) significantly advances how AI models interact with external tools and resources. Tool integration isn’t new in the AI world. MCP’s innovation lies in its client-server architecture, which makes it programming-language-agnostic. Clients and servers communicate only through JSON-RPC messages over a standard transport such as stdio or HTTP. As a result, developers can build a server in one language (like Python) and use it seamlessly from an application written in another (such as JavaScript). As MCP adoption grows, more developers are building and sharing servers, creating a rich ecosystem of tools that can be easily integrated into diverse AI solutions.

What is the Model Context Protocol?

MCP is a standardized communication protocol developed by Anthropic that enables AI models to interact with external tools, resources, and functions in a structured way. The protocol defines how models can request information or actions from external services and how those services should respond.

MCP addresses several challenges in AI development:

- Standardizing how AI models interact with tools

- Enabling dynamic discovery and use of capabilities

- Supporting interoperability between different programming languages

- Providing a clean architecture that separates tool implementation from usage

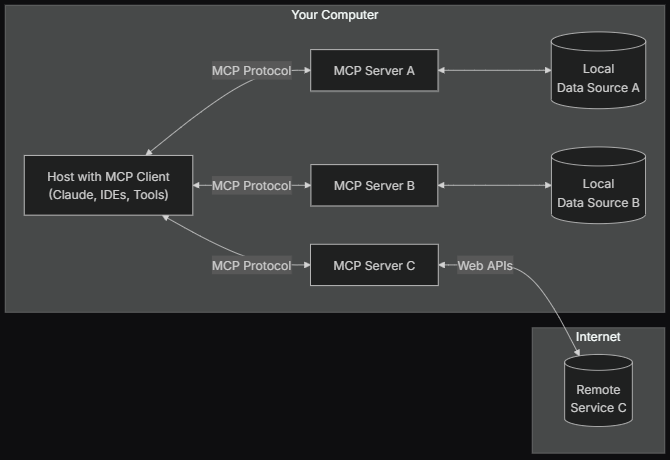

At its core, MCP operates on a client-server model. The AI application (the host) runs one MCP client for each MCP server it connects to, and each server exposes tools, resources, and prompts.
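Under the hood, every MCP message is a JSON-RPC 2.0 object. The sketch below builds the messages a client typically sends when it first connects: `initialize`, the `notifications/initialized` notification, `tools/list`, and a `tools/call` for the BMI tool shown later. It then serializes them as newline-delimited JSON, which is the framing the stdio transport uses. The `protocolVersion` string and the `example-client` name are assumptions for illustration. A real client gets these details from its SDK and reads a response between requests.

```python
import json
from itertools import count
from typing import Optional

# Request ids let the client match each response to its request.
_ids = count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request (has an id, so a response is expected)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> dict:
    """Build a JSON-RPC 2.0 notification (no id, so no response is expected)."""
    return {"jsonrpc": "2.0", "method": method}


def frame(msg: dict) -> str:
    """Serialize one message on a single line, as the stdio transport requires."""
    return json.dumps(msg, separators=(",", ":")) + "\n"


handshake = [
    request("initialize", {
        "protocolVersion": "2024-11-05",  # assumed version string
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1"},  # hypothetical client
    }),
    notification("notifications/initialized"),
    request("tools/list"),
    request("tools/call", {
        "name": "calculate_bmi",
        "arguments": {"weight_kg": 95, "height_m": 1.77},
    }),
]

wire = "".join(frame(m) for m in handshake)
print(wire, end="")
```

Because the wire format is just JSON text, nothing in these messages depends on the language either side is written in.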

The Language-Agnostic Breakthrough

The true innovation of MCP is its language-agnostic architecture. Before MCP, tool integrations were typically written against a specific framework or SDK, in the same language as the application hosting the model. MCP changes this paradigm entirely:

- Data scientists can build powerful analysis tools in Python

- Web developers can use these tools in JavaScript applications

- Mobile developers can access the same tools from Swift or Kotlin

- All without rewriting or reimplementing the tools

This cross-language compatibility eliminates significant development overhead and enables teams with different technical backgrounds to collaborate more effectively. Organizations can leverage their existing technical investments regardless of the language they were built in.
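Any language that can read and write lines of JSON can take part. The following sketch makes that concrete with a deliberately minimal, SDK-free server side written with only Python's standard library. It answers `tools/list` and `tools/call` for a single BMI tool. It illustrates the message shapes only and is not a compliant MCP server: it skips the `initialize` handshake and capability negotiation, and it has almost no error handling. The tool metadata is written by hand here as an assumption, whereas an SDK such as FastMCP generates it from the function signature.

```python
import json
import sys
from typing import Optional

# Hand-written metadata for one tool, in the shape a tools/list result uses.
TOOLS = [{
    "name": "calculate_bmi",
    "description": "Calculate BMI given weight in kg and height in meters",
    "inputSchema": {
        "type": "object",
        "properties": {
            "weight_kg": {"type": "number"},
            "height_m": {"type": "number"},
        },
        "required": ["weight_kg", "height_m"],
    },
}]


def calculate_bmi(weight_kg: float, height_m: float) -> float:
    return weight_kg / (height_m ** 2)


def _reply(msg_id, **body) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": msg_id, **body})


def handle(line: str) -> Optional[str]:
    """Process one incoming message; return a response line, or None for notifications."""
    msg = json.loads(line)
    if "id" not in msg:  # notifications get no reply
        return None
    method, params = msg.get("method"), msg.get("params", {})
    if method == "tools/list":
        return _reply(msg["id"], result={"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "calculate_bmi":
            # -32602 is JSON-RPC's "Invalid params" code
            return _reply(msg["id"], error={"code": -32602, "message": "Unknown tool"})
        bmi = calculate_bmi(**params["arguments"])
        # Tool output travels as a list of typed content items
        return _reply(msg["id"], result={
            "content": [{"type": "text", "text": f"{bmi:.2f}"}],
            "isError": False,
        })
    # -32601 is JSON-RPC's "Method not found" code
    return _reply(msg["id"], error={"code": -32601, "message": f"Method not found: {method}"})


def serve(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """stdio transport loop: one JSON message per input line, one per output line."""
    for raw in stdin:
        if not raw.strip():
            continue
        response = handle(raw)
        if response is not None:
            stdout.write(response + "\n")
            stdout.flush()
```

Calling `serve()` from a script's `if __name__ == "__main__":` block would turn this into a process a client could launch. A real MCP client would still fail at the handshake, however, because this sketch answers `initialize` with "Method not found". Filling in those gaps is exactly what the official SDKs do for you in each language.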

Implementing MCP Servers: A Practical Example with Python

Let’s examine a simple MCP server that calculates BMI. It is written in Python with FastMCP from the official MCP Python SDK:

```python
import json

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("My App")


@mcp.tool()
def calculate_bmi(weight_kg: float, height_m: float) -> float:
    """Calculate BMI given weight in kg and height in meters"""
    return weight_kg / (height_m**2)


@mcp.resource("json://profile/{user_name}")
def get_user_profile(user_name: str) -> str:
    """Return the weight_kg and height_m of a user"""
    # Hard-coded sample data standing in for a real database
    database = {
        "samuel": {"weight_kg": 95, "height_m": 1.77},
        "maria": {"weight_kg": 60, "height_m": 1.65},
    }
    # Serialize to JSON so the return value matches the declared str type
    return json.dumps(database[user_name])


@mcp.prompt()
def check_bmi(bmi: float, user_name: str) -> str:
    """Return a prompt asking the model to evaluate the BMI in the user's context"""
    prompts = {
        "samuel": f"I'm an athlete, consider this when evaluating my BMI: {bmi}",
        "maria": f"I'm a 70-year-old woman, consider this when evaluating my BMI: {bmi}",
    }
    return prompts[user_name]


if __name__ == "__main__":
    # Uses the stdio transport by default, so a client can launch this file as a subprocess
    mcp.run()
```

This example demonstrates the three main components of an MCP server:

- Tools: Functions the model can call to perform operations (like calculating BMI)

- Resources: Data exposed under URIs or URI templates (like json://profile/{user_name}) that clients can read

- Prompts: Reusable prompt templates that turn arguments into contextually appropriate messages for the model
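In the server above, json://profile/{user_name} is a resource template. A client reads a concrete URI such as json://profile/maria, and the server has to extract user_name="maria" before calling get_user_profile. The sketch below shows that matching step with a regular expression. It is an illustration under simplifying assumptions, not FastMCP's actual implementation: it supports only plain `{name}` placeholders, and each placeholder matches a single path segment.

```python
import re
from typing import Optional


def template_to_regex(template: str) -> re.Pattern:
    """Turn 'json://profile/{user_name}' into a regex with one named group per placeholder."""
    pattern = ""
    pos = 0
    for m in re.finditer(r"\{(\w+)\}", template):
        pattern += re.escape(template[pos:m.start()])  # literal text, escaped
        pattern += f"(?P<{m.group(1)}>[^/]+)"         # placeholder: one path segment
        pos = m.end()
    pattern += re.escape(template[pos:])
    return re.compile(pattern)


def match_uri(template: str, uri: str) -> Optional[dict]:
    """Return the extracted parameters, or None if the URI does not fit the template."""
    m = template_to_regex(template).fullmatch(uri)
    return m.groupdict() if m else None


print(match_uri("json://profile/{user_name}", "json://profile/maria"))   # {'user_name': 'maria'}
print(match_uri("json://profile/{user_name}", "json://settings/maria"))  # None
```

Using a full match rather than a prefix match means json://profile/maria/extra is rejected instead of silently matching.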

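Using this server from a client application is straightforward. The following client uses the third-party repenseai library to start the server and let a Claude model call its tools: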

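```python
import asyncio

import nest_asyncio

# Only needed when an event loop is already running, e.g. inside a Jupyter notebook
nest_asyncio.apply()

from repenseai.genai.mcp.server import Server
from repenseai.genai.agent import AsyncAgent
from repenseai.genai.tasks.api import AsyncTask

# How to start the BMI server above (saved as servers/bmi.py); since it uses
# the stdio transport, the client launches it as a subprocess
server = Server(
    name="teste_mcp",
    command='python',
    args=["servers/bmi.py"]
)


async def main():
    agent = AsyncAgent(
        model="claude-3-5-sonnet-20241022",
        model_type="chat",
        server=server
    )

    task = AsyncTask(
        user="What is my BMI? Height: {height}, Weight: {weight}",
        agent=agent
    )

    # The dict values fill the {height} and {weight} placeholders in the prompt
    response = await task.run({"height": "1.77", "weight": "95kg"})
    print("\n" + response['response'])


asyncio.run(main())
```

Advanced Applications with MCP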

MCP enables developers to create sophisticated AI applications by combining specialized tools. For example, an agent can connect to a knowledge-graph memory server (here, the mcp/memory Docker image) to gain persistent memory:

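```python
server = Server(
    name="knowledge_graph_memory",
    command='docker',
    # -i keeps stdin open for the stdio transport; --rm deletes the container on exit,
    # while the named volume "claude-memory" (mounted at /app/dist) keeps the stored data
    args=["run", "-i", "-v", "claude-memory:/app/dist", "--rm", "mcp/memory"],
)
```

This runs the memory server in a Docker container and mounts the named volume claude-memory at /app/dist. The --rm flag removes only the container, so the data in the volume survives between runs. This allows the AI to maintain context across interactions. The architecture makes it possible to create AI systems that combine: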

- Language understanding (from the AI model)

- Specialized capabilities (from MCP tools)

- Persistent memory (from an MCP server such as the memory server above)

- Context-aware responses (from MCP prompts)
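Combining the model's language understanding with MCP tools depends on the client translating between two formats. MCP's `tools/list` returns each tool with a `name`, a `description`, and a JSON Schema under `inputSchema`. Anthropic's Messages API expects `name`, `description`, and `input_schema`. The sketch below performs that translation and flattens a `tools/call` result back into text for the model. This article does not show how repenseai does this internally, so treat the sketch as the general pattern rather than that library's code. The `listed` payload is a hand-written example, not captured server output.

```python
def mcp_tool_to_anthropic(tool: dict) -> dict:
    """Map one MCP tool definition (from tools/list) to the Anthropic Messages API tool format."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],  # already JSON Schema, so it passes through unchanged
    }


def tool_result_text(result: dict) -> str:
    """Join the text items of a tools/call result so they can be sent back to the model."""
    return "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )


# Hand-written example of a tools/list result for the BMI server
listed = {
    "tools": [{
        "name": "calculate_bmi",
        "description": "Calculate BMI given weight in kg and height in meters",
        "inputSchema": {
            "type": "object",
            "properties": {"weight_kg": {"type": "number"}, "height_m": {"type": "number"}},
            "required": ["weight_kg", "height_m"],
        },
    }]
}

anthropic_tools = [mcp_tool_to_anthropic(t) for t in listed["tools"]]
print(anthropic_tools[0]["name"], sorted(anthropic_tools[0]["input_schema"]["properties"]))
```

Because both sides describe parameters with JSON Schema, the mapping is mostly renaming. That is one reason a single MCP server can be exposed to different model providers with a thin adapter.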

The Growing MCP Ecosystem

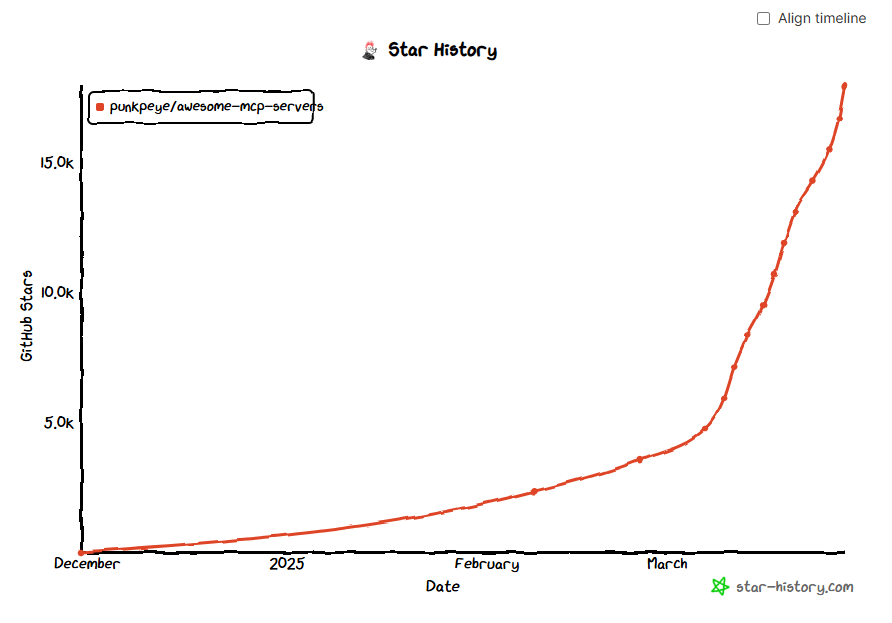

As MCP adoption increases, a vibrant ecosystem is emerging:

- Developers are building and sharing MCP servers for various domains, from data analysis to API integrations to knowledge management.

- Major AI platforms are supporting MCP with direct integration in their services and development tools.

- The community is establishing best practices for tool design, error handling, security, and cross-language compatibility.

Conclusion

MCP servers represent a significant advancement in AI development. Tool usage itself is not new. What is new is that the client-server architecture enables broad interoperability between programming languages and systems. Specialized tools can be developed in any language and used by any MCP-compatible client.

The growing adoption of MCP has created a virtuous cycle where more developers build and share servers, expanding the capabilities available to everyone. As this ecosystem continues to grow, we can expect increasingly sophisticated AI applications that leverage specialized tools from multiple domains, all working together seamlessly regardless of the underlying implementation languages.

Key Takeaways

- MCP innovates through a language-agnostic architecture that bridges different programming environments

- The standardized protocol enables seamless tool integration without reimplementation

- Community adoption has created a growing ecosystem of shared servers

- Implementing MCP servers is straightforward with decorator-based syntax

- MCP enables AI systems to access specialized capabilities while maintaining a clean architectural separation