In our previous post, we explored how generative AI tools can assist in turning Figma designs into front-end code. While these tools still have limitations, they show promising progress in automating repetitive tasks and speeding up development.

For this phase of the experiment, we decided to push the tools further. Our goal was to test how much we could improve their outputs using detailed prompts, evaluate their ability to handle complexity, and identify when it’s better to switch back to manual coding.

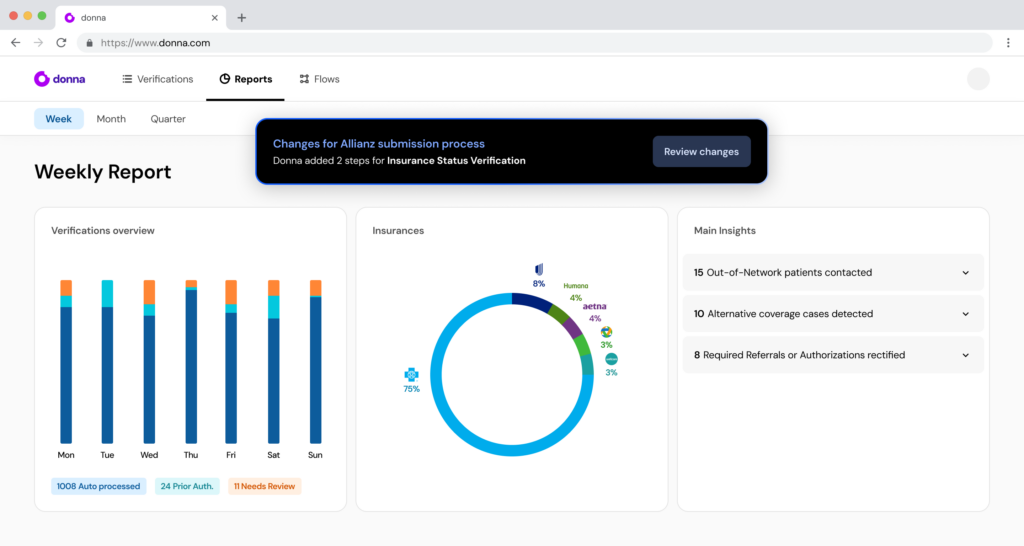

To make things more challenging, we added a new screen with complex elements, including floating components, charts, and accordions, to assess how well the tools could connect the two screens and whether they could detect and reuse shared components.

V0:

Using prompts to refine the output improved the initial screen significantly. Visual fidelity improved, and the design became more responsive.

However, V0 struggled with technical execution. When asked to add a new page to the existing project, it deleted the previous work and started from scratch.

The new screen results were mixed. While charts were well-rendered, collapsible elements and toast notifications fell short. Moreover, V0 didn’t seem to learn from earlier iterations, often forgetting consistent components like headers.

Another drawback is the token limit in the free version, which restricts productivity. The premium version offered more tokens but didn’t meet expectations in terms of added features. We were expecting more from the direct Figma export capability.

Bolt:

Bolt required careful configuration to produce accurate results. While the learning curve was steep, once properly set up, the tool delivered outputs that were both visually accurate and technically consistent. With fewer prompts, we got a result that was even more accurate than what the other tools produced.

Bolt’s strength lies in its ability to display the entire project setup, making it easier to identify and fix technical errors, which is a valuable feature for integrating AI-generated code into live projects.

However, results with the second screen were mixed. While Bolt maintained consistency with previous designs, some visual components didn’t meet expectations. On the technical side, though, the generated code was solid and practical.

Cursor:

Cursor required multiple prompts and iterations to refine the first screen, delivering results on par with its competitors in terms of visuals. Its main advantage lies in practicality: the tool integrates directly into the development environment, enabling immediate adjustments to AI-generated code and carrying those improvements forward in subsequent iterations.

Where it really shone was the more complex screen. The chart elements were accurate, the new screen was properly connected to the previous one, and the floating alert was the most accurate of the three tools, backed by a very good code structure. That combination genuinely impressed me.

After testing all three tools in a similar way, I felt more inclined to use Cursor, since it was the most practical of them. With minimal prompting, it produced functional elements like the tabs to filter by status, the search bar, the dropdowns, and the tooltips. The routes are linked too, making it a strong choice for iterative development.
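
To give an idea of what "functional" means for the status tabs and the search bar, here is a minimal TypeScript sketch of the filtering logic behind them. This is not the code Cursor generated: the `Task` type, the status values, and the `filterTasks` function are hypothetical names picked only for illustration.

```typescript
// Hypothetical data model; the real screens may use different fields.
type Status = "open" | "in_progress" | "done";

interface Task {
  id: number;
  title: string;
  status: Status;
}

// "all" stands for the tab that shows every status.
type StatusTab = Status | "all";

// Returns the tasks that match both the selected tab and the search text.
function filterTasks(tasks: Task[], tab: StatusTab, query: string): Task[] {
  const needle = query.trim().toLowerCase();
  return tasks.filter((task) => {
    const matchesTab = tab === "all" || task.status === tab;
    const matchesQuery =
      needle === "" || task.title.toLowerCase().includes(needle);
    return matchesTab && matchesQuery;
  });
}

// Sample data, invented for this example.
const sampleTasks: Task[] = [
  { id: 1, title: "Design review", status: "open" },
  { id: 2, title: "Build charts", status: "in_progress" },
  { id: 3, title: "Review accordion", status: "done" },
];

console.log(filterTasks(sampleTasks, "all", "review").map((t) => t.id));
```

Keeping the filter as a pure function, separate from the UI, is one way to make a piece like this easy to adjust by hand after the tool generates the first version.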

Conclusions

Of the three options evaluated, Cursor demonstrated the greatest potential. Its code-oriented approach ensures high-quality, modular outputs that adapt well to project-specific requirements. Although Bolt and V0 have their strengths, Cursor’s practical integration into the development environment makes it the most versatile option.

Let’s review our criteria and how well Cursor performed on each one:

Fidelity to Design: Did the resulting code visually match the original Figma prototype?

Cursor was competitive, matching the design fidelity of more design-oriented tools. There is room for improvement, but it’s a good way to speed up our work.

Code Quality: Was the generated code clean, modular, and easy for developers to work with?

It delivered clean, modular code that was easy to build upon.

Responsiveness: Could the tools handle adaptive layouts for various devices?

Cursor handled adaptive layouts effectively, especially for complex designs.

Time Savings: Did the tools genuinely speed up the process compared to manual coding?

The tool sped up the initial development process significantly, especially in functional aspects.

What are the ideal scenarios to use these Generative AI tools?

I would use these tools to generate simple component or page designs. They work well as a starting point, providing a clean base of code that developers can expand upon. For example, creating a static header or a straightforward layout is where these tools shine.
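
As a rough illustration of the scale of task I have in mind, a static header shared by two pages could be as small as the TypeScript sketch below. It is an assumption-laden example, not output from any of the tools: `NavLink`, `renderHeader`, the title, and the link paths are all hypothetical.

```typescript
// Hypothetical navigation entry; labels and paths are placeholders.
interface NavLink {
  label: string;
  href: string;
}

// Escapes characters that have special meaning in HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Renders a static header as an HTML string so several pages can reuse it.
function renderHeader(title: string, links: NavLink[]): string {
  const items = links
    .map(
      (link) =>
        `<li><a href="${escapeHtml(link.href)}">${escapeHtml(link.label)}</a></li>`
    )
    .join("");
  return `<header><h1>${escapeHtml(title)}</h1><nav><ul>${items}</ul></nav></header>`;
}

// Placeholder links, invented for this example.
const headerLinks: NavLink[] = [
  { label: "Dashboard", href: "/dashboard" },
  { label: "Details", href: "/details" },
];

console.log(renderHeader("My App", headerLinks));
```

Defining the header once and calling it from every page is the kind of reuse we were hoping the tools would detect on their own when we added the second screen.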

However, as designs become more intricate or require specific interactions, manual coding is often the better choice. GenAI tools struggle to fully replicate complex behaviors or custom logic without significant guidance.

Cursor’s higher token limit also allows for longer, iterative improvement cycles, which can be valuable for refining prototypes and for continuing to speed up development of the functional parts.

If you’re still a beginner at converting a design mockup into quality functional code, these tools can also serve as educational resources. Cursor not only helps you build a good structure, but also explains what it did and the reasoning behind it, so you can learn best practices in the process.

Have you already tried any similar tools? What was your experience? Also, if you like reviewing new development approaches in a hands-on way, you might be a good candidate for our innovation team. Check our open positions 😉