AI’s promise often leads us down a familiar path: automating entire processes in the hope of increased efficiency. But as many discover, this approach presents significant challenges. Fully automated complex tasks are not only hard to implement correctly; they also struggle to earn user trust. I believe we, as product shapers, have to pay more attention to designing the level of agency: carefully deciding where AI should act independently, where it should assist, and where humans must remain in control. This shift from automation to augmentation emphasizes human-centered design and opens new doors for thoughtful AI integration.

Automation vs. Augmentation: The Core Challenge

At its core, automation focuses on independence, delegating entire processes to AI. The most established form of AI today is machine learning, which trains models to reproduce tasks from previous experience (e.g., labeled data), so full automation is usually the first idea that comes to mind when thinking about AI implementation. While this sounds ideal from both a business and technical perspective, it often leaves users feeling excluded, disconnected, and distrustful. Systems that lack transparency or fail to involve users in critical decisions risk creating friction rather than simplifying workflows. Augmentation, on the other hand, enhances human abilities, enabling users to work smarter and make informed decisions.

Ben Shneiderman, in Human-Centered AI, highlights this distinction. He argues that while automation can bring convenience, Human-Centered AI (HCAI) must prioritize reliability, safety, and trustworthiness. This means giving users the tools they need to collaborate effectively with AI while retaining control over key decisions.

Interested in learning more about how to bring this topic to a discussion or workshop? I’ve previously written about it when commenting on how we define Automation vs Augmentation opportunities in Healthcare.

In a recent discussion with Joana Cerejo, a seasoned expert in UX and AI design who was already speaking about Automation vs Augmentation in 2021, we explored the tension between the two approaches. She emphasizes that designing AI systems from a human-centered perspective is critical to ensuring they not only function well but also earn the trust and acceptance of users. This underscores the need for thoughtful consideration of the agency level in AI design.

Designing the Right Agency Level: A Human-Centered Approach

Creating AI that works with users rather than for them requires carefully crafting its level of agency. This approach ensures that AI enhances human capabilities while maintaining user trust. As Carlos Toxtli explains in his paper, Human-Centered Automation (2024), fostering effective collaboration between humans and machines is critical. AI should leverage its computational strengths, but humans bring unique judgment, creativity, and ethical considerations.

Toxtli provides key principles for designing human-centric automation systems, which I believe are worth sharing across all kinds of product shaper communities:

- Adapt to User Needs: AI systems should dynamically adjust to the preferences, expertise, and goals of their users.

- Provide Intuitive Interfaces: Interactions with AI must be seamless and easily understood, removing barriers to effective use.

- Leverage AI Capabilities Without Overstepping: AI should support users by amplifying their strengths, not replacing them.

- Ensure Reliability and Transparency: Systems must be explainable, predictable, and trustworthy to earn user confidence.

- Encourage Collaboration: Design systems that foster human-AI teamwork, enabling each to contribute their unique strengths.

Let’s explore an example and the discussions it sparked. At Arionkoder, we are experimenting with the concept of agency level in a project aimed at reducing the administrative burden in healthcare using AI agents. This work explores not only agentic systems, but also how to balance automation and augmentation, ensuring the AI enhances workflows without overwhelming users.

Through interviews with users and subject matter experts, we identified key challenges. Nicole Cook, founder of Alvee, shared that healthcare providers are often hesitant to adopt disruptive technologies. She emphasized starting with small, specific automation tasks and expanding gradually to build trust and demonstrate benefits. This insight informed our design, leading to features like agents whose level of automation adapts to the practice’s needs while offering full transparency, ensuring users retain control.

Users valued these elements of transparency and control. However, they remained skeptical about whether the agents could handle real-world complexities, such as outdated systems, manual workflows, and multi-stakeholder environments. This feedback highlights the need for AI tools that support users rather than attempting to “automate away” their intervention.

Could Progressive Automation Build Trust Over Time?

Internally, we’ve also reflected on whether this trust issue stems from AI being a relatively new technology. Similar to how users needed time to adapt to the internet or mobile phones, trust in AI may take time to develop. This “time to adapt” is a critical human need, and designing the “agency” of AI systems from a human-centered perspective must consider this.

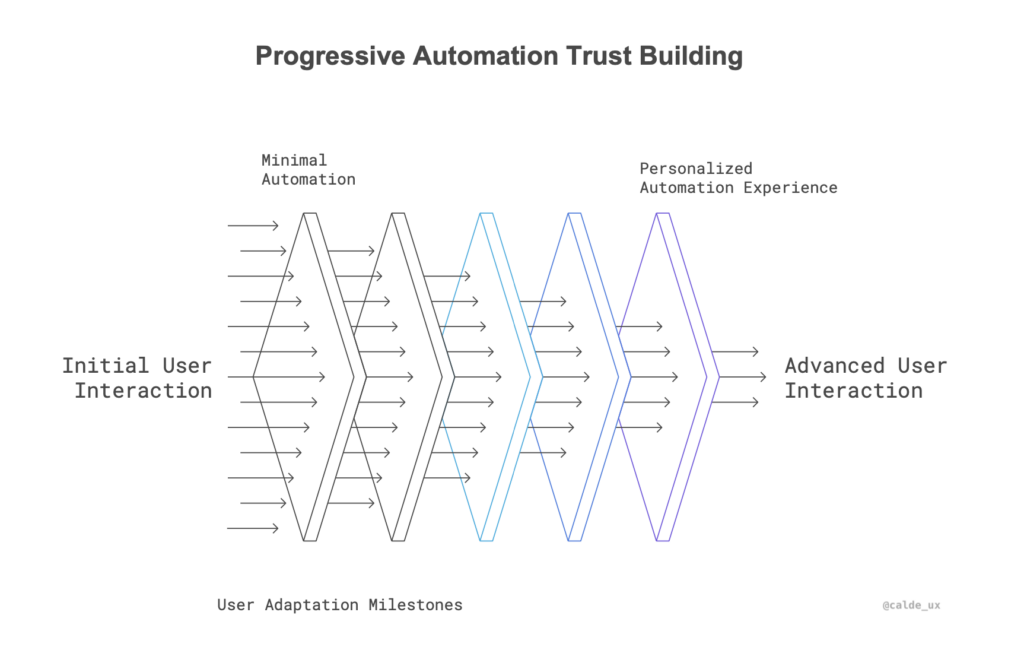

Building on this, we’ve been exploring an idea: could progressive automation—starting with minimal automation and increasing it gradually—help build trust and create more dynamic AI systems? This approach mirrors how humans naturally learn and adapt, beginning with manual effort and automating tasks as confidence and familiarity grow. By tailoring automation levels to user behavior and needs, AI systems might be able to foster trust over time while delivering increasingly personalized experiences.
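To make the idea concrete, here is a minimal sketch of what a progressive automation policy could look like. It is an illustration under stated assumptions, not the design used in our project: the three agency levels, the `ProgressiveAgency` class, and the `promote_after` threshold are all hypothetical names and values chosen for the example. The policy starts at the lowest level of agency, moves up one level after a streak of user approvals, and steps back one level whenever the user rejects an AI action.

```python
from dataclasses import dataclass
from enum import IntEnum


class AgencyLevel(IntEnum):
    """Hypothetical agency levels, ordered from least to most autonomous."""
    SUGGEST = 0      # AI proposes, the human performs the task
    CONFIRM = 1      # AI prepares the action, the human approves it
    AUTONOMOUS = 2   # AI acts on its own and reports what it did


@dataclass
class ProgressiveAgency:
    """Tracks user feedback and adjusts the agency level one step at a time."""
    promote_after: int = 5  # assumed number of consecutive approvals needed
    level: AgencyLevel = AgencyLevel.SUGGEST
    streak: int = 0

    def record_feedback(self, approved: bool) -> AgencyLevel:
        if approved:
            self.streak += 1
            can_promote = self.level < AgencyLevel.AUTONOMOUS
            if self.streak >= self.promote_after and can_promote:
                # Enough consecutive approvals: grant one more level of agency.
                self.level = AgencyLevel(self.level + 1)
                self.streak = 0
        else:
            # A rejection resets the streak and steps agency back one level.
            self.streak = 0
            if self.level > AgencyLevel.SUGGEST:
                self.level = AgencyLevel(self.level - 1)
        return self.level


# Usage: three approvals promote the agent, one rejection demotes it again.
policy = ProgressiveAgency(promote_after=3)
for _ in range(3):
    policy.record_feedback(approved=True)
print(policy.level.name)  # CONFIRM

policy.record_feedback(approved=False)
print(policy.level.name)  # SUGGEST
```

Moving one level at a time in both directions is a deliberate choice in this sketch: it keeps changes in behavior small and predictable, and a single rejection is enough to hand control back to the user.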

But this raises questions. Would users find gradual automation too limiting at first, expecting more advanced functionality upfront? And how can systems adapt seamlessly to individual needs without feeling inconsistent? There’s also the matter of personalization: while it offers exciting possibilities, it depends on collecting and analyzing user data over time, which brings up important considerations around privacy and ethics.

These are open questions we’re considering as we refine our approach. While still in its early stages, this exploration is helping us think differently about how AI systems, and their potential to adapt, can evolve alongside their users. The project is still in progress, and we will share more comprehensive insights as our ideas mature. Stay tuned.

A Call to Action: Designing for a Human-Centered Future

If AI is to fulfill its promise, designers, researchers, and practitioners must move beyond automating for automation’s sake. The future lies in creating technologies that adapt to human needs, empower users, and amplify the strengths of both humans and machines. We must collectively focus on developing systems that are not only functional but also human-centered in every dimension.

For me, the design of AI systems centers on a key challenge: How do we define the right level of agency humans need from the AI system? By taking ownership of this question, product shapers have the opportunity to craft AI that doesn’t just automate tasks but enhances how we live, work, and innovate. As we refine this approach, let’s focus on systems that respect human abilities, foster trust, and empower collaboration—because the future of AI isn’t about machines; it’s about the people they’re built to benefit.

Reach out to us at [email protected] to explore how human-centered AI can help you transform the experience of your users.